Learn from our AI Security Experts

Discover every model. Secure every workflow. Prevent AI attacks - without slowing innovation.

min read

Integrating HiddenLayer’s Model Scanner with Databricks Unity Catalog

As machine learning becomes more embedded in enterprise workflows, model security is no longer optional. From training to deployment, organizations need a streamlined way to detect and respond to threats that might lurk inside their models. The integration between HiddenLayer’s Model Scanner and Databricks Unity Catalog provides an automated, frictionless way to monitor models for vulnerabilities as soon as they are registered. This approach ensures continuous protection without slowing down your teams.

Introduction

As machine learning becomes more embedded in enterprise workflows, model security is no longer optional. From training to deployment, organizations need a streamlined way to detect and respond to threats that might lurk inside their models. The integration between HiddenLayer’s Model Scanner and Databricks Unity Catalog provides an automated, frictionless way to monitor models for vulnerabilities as soon as they are registered. This approach ensures continuous protection without slowing down your teams.

In this blog, we’ll walk through how this integration works, how to set it up in your Databricks environment, and how it fits naturally into your existing machine learning workflows.

Why You Need Automated Model Security

Modern machine learning models are valuable assets. They also present new opportunities for attackers. Whether you are deploying in finance, healthcare, or any data-intensive industry, models can be compromised with embedded threats or exploited during runtime. In many organizations, models move quickly from development to production, often with limited or no security inspection.

This challenge is addressed through HiddenLayer’s integration with Unity Catalog, which automatically scans every new model version as it is registered. The process is fully embedded into your workflow, so data scientists can continue building and registering models as usual. This ensures consistent coverage across the entire lifecycle without requiring process changes or manual security reviews.

This means data scientists can focus on training and refining models without having to manually initiate security checks or worry about vulnerabilities slipping through the cracks. Security engineers benefit from automated scans that are run in the background, ensuring that any issues are detected early, all while maintaining the efficiency and speed of the machine learning development process. HiddenLayer’s integration with Unity Catalog makes model security an integral part of the workflow, reducing the overhead for teams and helping them maintain a safe, reliable model registry without added complexity or disruption.

Getting Started: How the Integration Works

To install the integration, contact your HiddenLayer representative to obtain a license and access the installer. Once you’ve downloaded and unzipped the installer for your operating system, you’ll be guided through the deployment process and prompted to enter environment variables.

Once installed, this integration monitors your Unity Catalog for new model versions and automatically sends them to HiddenLayer’s Model Scanner for analysis. Scan results are recorded directly in Unity Catalog and the HiddenLayer console, allowing both security and data science teams to access the information quickly and efficiently.

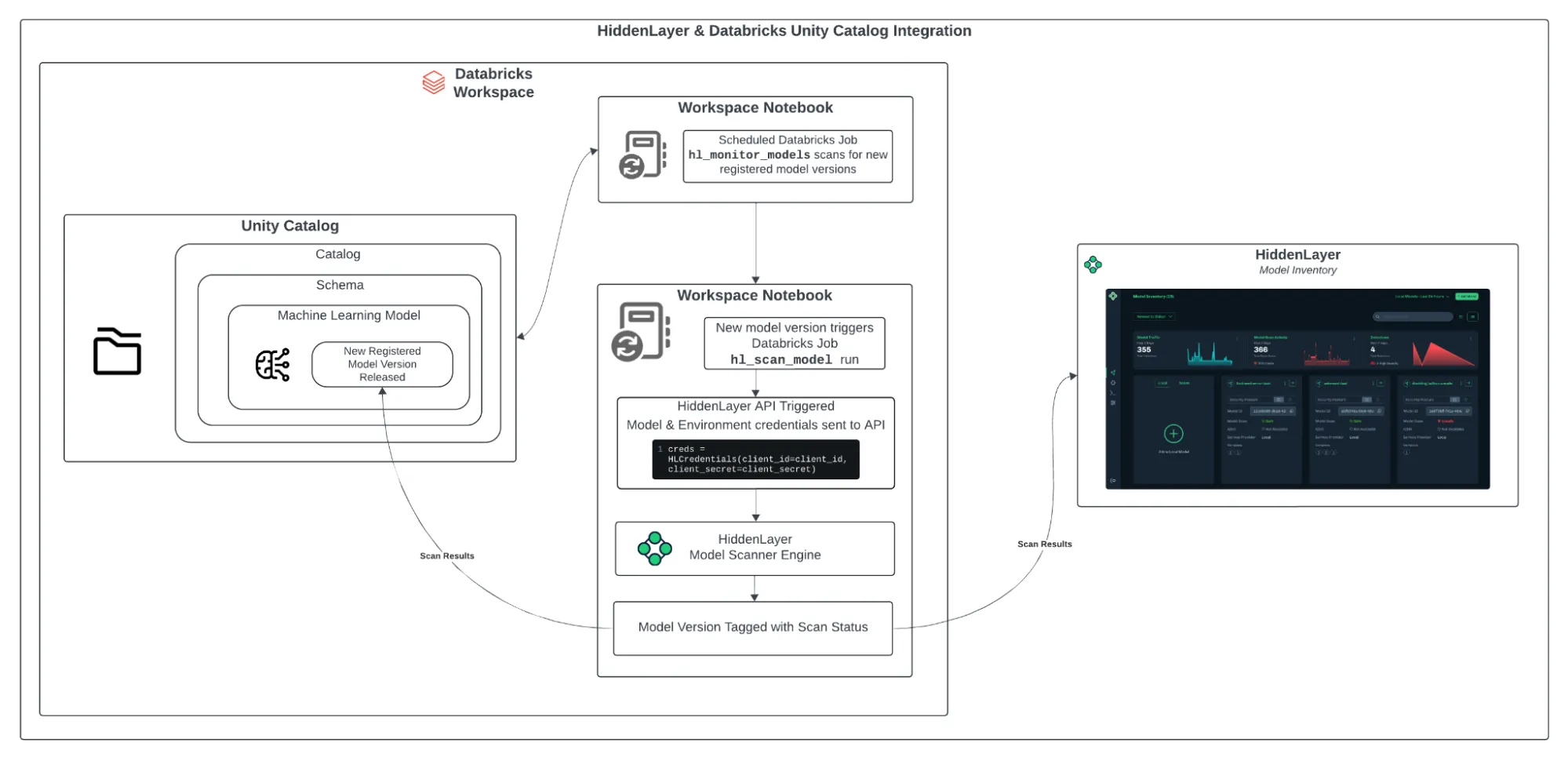

Figure 1: HiddenLayer & Databricks Architecture Diagram

The integration is simple to set up and operates smoothly within your Databricks workspace. Here’s how it works:

- Install the HiddenLayer CLI: The first step is to install the HiddenLayer CLI on your system. Running this installation will set up the necessary Python notebooks in your Databricks workspace, where the HiddenLayer Model Scanner will run.

- Configure the Unity Catalog Schema: During the installation, you will specify the catalogs and schemas that will be used for model scanning. Once configured, the integration will automatically scan new versions of models registered in those schemas.

- Automated Scanning: A monitoring notebook called hl_monitor_models runs on a scheduled basis. It checks for newly registered model versions in the configured schemas. If a new version is found, another notebook, hl_scan_model, sends the model to HiddenLayer for scanning.

- Reviewing Scan Results After scanning, the results are added to Unity Catalog as model tags. These tags include the scan status (pending, done, or failed) and a threat level (safe, low, medium, high, or critical). The full detection report is also accessible in the HiddenLayer Console. This allows teams to evaluate risk without needing to switch between systems.

Why This Workflow Works

This integration helps your team stay secure while maintaining the speed and flexibility of modern machine learning development.

- No Process Changes for Data Scientists

Teams continue working as usual. Model security is handled in the background. - Real-Time Security Coverage

Every new model version is scanned automatically, providing continuous protection. - Centralized Visibility

Scan results are stored directly in Unity Catalog and attached to each model version, making them easy to access, track, and audit. - Seamless CI/CD Compatibility

The system aligns with existing automation and governance workflows.

Final Thoughts

Model security should be a core part of your machine learning operations. By integrating HiddenLayer’s Model Scanner with Databricks Unity Catalog, you gain a secure, automated process that protects your models from potential threats.

This approach improves governance, reduces risk, and allows your data science teams to keep working without interruptions. Whether you’re new to HiddenLayer or already a user, this integration with Databricks Unity Catalog is a valuable addition to your machine learning pipeline. Get started today and enhance the security of your ML models with ease.

All Resources

From National Security to Building Trust: The Current State of Securing AI

Consider this sobering statistic: 77% of organizations have been breached through their AI systems in the past year. With organizations deploying thousands of AI models, the critical role of these systems is undeniable. Yet, the security of these models is often an afterthought, brought into the limelight only in the aftermath of a breach, with the security team shouldering the blame.

The Stark Reality: Securing AI in Today's Organizations

Consider this sobering statistic: 77% of organizations have been breached through their AI systems in the past year. With organizations deploying thousands of AI models, the critical role of these systems is undeniable. Yet, the security of these models is often an afterthought, brought into the limelight only in the aftermath of a breach, with the security team shouldering the blame.

This oversight stems not from malice but from a communication complication. Too often, security is sidelined during the developmental phases of AI, constricting their safeguarding capabilities —a gap widened by organizational silos and a scarcity of resources dedicated to security for AI. Additionally, legislators and regulators are playing catch-up on what is needed to secure AI, making public trust a bit tricky.

This pivotal shift in public trust, as highlighted by the Edelman Trust Barometer Global Report 2024, marks a critical juncture in the discourse on AI governance. A two-to-one margin of respondents believe innovation is poorly managed, especially regarding “insufficient government regulation." The call for a more discerning examination of AI technologies becomes increasingly pressing, with skepticism leaning away from governmental oversight and tilting toward the corporate space. This evolving landscape of trust and skepticism sets the stage for a deeper exploration into how AI, particularly within national security, navigates the intricate balance between innovation and ethical responsibility.

Bridging Trust and Technology: The Role of AI in National Security

As we delve into national security, the focus sharpens on AI's transformative role. The shift in trust dynamics calls for reevaluating how these advanced technologies are integrated into our defense mechanisms and intelligence operations. At the intersection of societal trust and technological advancement, AI emerges as a pivotal force in reshaping our approach to national security.

- Intelligence & Surveillance: AI improves intelligence gathering by efficiently analyzing large data sets from sources like satellite imagery and digital communications, enhancing threat detection and prediction.

- Cyber Defense: AI strengthens cybersecurity by detecting and neutralizing advanced threats that bypass traditional defenses, protecting critical infrastructure.

- Decision Support: AI supports strategic decision-making in national security by merging data from various sources, enabling leaders to make informed, effective choices.

Securing AI: Embracing the Paradox

For Chief Information Security Officers (CISOs), the path forward is complex. It requires not only the integration of AI into security strategies but also a vigilant defense against AI-driven threats. This includes securing AI tools, staying updated on AI advancements, enhancing digital literacy, implementing resilient authentication protocols, and exploring adversarial AI research.

The integration of AI in national security strategies underscores the urgent need to shield these systems from potential exploitation. AI technologies' dual-use nature presents distinctive challenges, necessitating resilient security measures to avoid misuse.

- Security by Design: It is crucial to prioritize security from the initial stages of AI development. This involves safeguarding AI models, their data, and their operating infrastructure, facilitating early detection and remediation of vulnerabilities.

- Tailored Threat Intelligence for AI: Customizing threat intelligence to address AI-specific vulnerabilities is imperative. This demands a thorough understanding of the tactics, techniques, and procedures employed by adversaries targeting AI systems, from data poisoning to model exploitation, ensuring a proactive defense stance.

- Advocacy for Ethical and Transparent AI: Crafting ethical guidelines for AI's use in national security is vital. Promoting the transparency and audibility of AI decision-making processes is fundamental to sustaining public trust and ethical integrity.

Collaboration and knowledge sharing are critical. Engaging with the AI and cybersecurity communities, participating in joint exercises, and advocating for the ethical use of AI are essential steps toward a secure digital future.

For Chief Information Security Officers (CISOs), the path forward is complex. It requires not only the integration of AI into security strategies but also a vigilant defense against AI-driven threats.

The Challenges and Opportunities of Generative AI

Generative AI (GenAI) holds immense potential but is not without risks. From biased outputs to the creation of malicious content, the misuse of GenAI can have profound implications. The development of deepfakes and malicious actors' access to dangerous information highlights the urgent need for comprehensive security measures. Building strong collaborations between AI developers and security teams, conducting thorough evaluations of AI models, and tracing the origins of AI-generated content are vital steps in mitigating the risks associated with GenAI technologies. We have already seen such cases. Recently, malicious actors used AI to disguise themselves on a video conference call and defrauded 25.6 million USD transferred to them from a finance worker in Hong Kong, thinking it was a direct order from their CFO. Additionally, artificially generated Joe Biden robocalls are telling New Hampshire Democrats not to vote.

The ultimate goal remains evident in our collective journey toward securing AI: to foster trust and protect our national security. This journey is a reminder that in the vast and complex landscape of security for AI, the most crucial element is perhaps us—the humans behind the machines. It’s a call to action for every stakeholder involved, from the engineers crafting the algorithms to the policy makers shaping the guidelines and the security professionals safeguarding the digital frontiers.

The Human Factor: Our Role in the AI Ecosystem

Our relationship with AI is symbiotic; we shape its development, and in turn, it redefines our capabilities and challenges. This interdependence underscores the importance of a human-centric approach in securing AI, where ethical considerations, transparency, and accountability take center stage.

Cultivating a Culture of Security and Ethical AI

Creating a culture that prioritizes security and ethical considerations in AI development and deployment is paramount. This involves:

- Continuous Education and Awareness: Keeping up with the latest developments in AI and cybersecurity and understanding the ethical implications of AI technologies.

- Inclusive Dialogue: Fostering open discussions among all stakeholders, including technologists, ethicists, policymakers, and the general public, ensuring a balanced perspective on AI's role in society.

- Ethical Frameworks and Standards: Developing, expanding, and adhering to comprehensive ethical guidelines and standards for AI use, particularly in sensitive areas like national security.

To cultivate a culture that weaves security with ethical AI practices, we must emphasize an often-overlooked cornerstone: real-time, or runtime, security. Ethical AI frameworks guide us toward fairness and transparency, setting a high bar for behavior. However, these ethical pillars cannot withstand cyber threats’ dynamic and evolving landscape.

Ethical initiatives are commendable but remain incomplete without integrating resilient real-time security mechanisms. Vigilant, ongoing protection defends AI systems against relentless emerging threats. This symbiotic relationship between ethical integrity and security resilience is not just preferable—it’s critical. It ensures that AI systems not only embody ethical principles but also stand resilient against the tangible challenges of the real world, thus improving trust at every juncture of operation.

A Collaborative Path Forward

No single entity can tackle the challenges of securing AI alone. It requires a concerted effort from governments, corporations, academia, and civil society to:

- Strengthen International Cooperation: Collaborate on global standards and frameworks for security and ethics for AI, ensuring a unified approach to tackling AI threats.

- Promote Public-Private Partnerships: Leverage the strengths and resources of both the public and private sectors to enhance security for AI infrastructure and research.

- Empower Communities: Engage with local and global communities to raise awareness about security for AI and foster a culture of responsible AI use.

This collaboration is a united front that is not just about fortifying our defenses; it’s about shaping an ecosystem where security and ethical AI are intertwined, ensuring a resilient and trustworthy future.

Securing Together

Regarding AI and national security, the path is burdened with challenges but also overflowing with opportunities. The rapid advancements in AI offer unprecedented tools for safeguarding our nations and enhancing our capabilities. Yet, with great power comes great responsibility. It is crucial that we steer the course of AI development towards a secure, ethical, and inclusive future.

In this collective endeavor, the essence of our mission in securing AI becomes clear—it’s not just about securing algorithms and data but about safeguarding the very fabric of our societies. Ultimately, the journey to secure AI is a testament to our collective resolve to harness the power of technology for the greater good, underscored by the indispensable role of real-time security in realizing this vision.

Understanding the Threat Landscape for AI-Based Systems

To help understand the evolving cybersecurity environment, we developed HiddenLayer’s 2024 AI Threat Landscape Report as a practical guide to understanding the security risks that can affect every industry and to provide actionable steps to implement security measures at your organization.

To help understand the evolving cybersecurity environment, we developed HiddenLayer’s 2024 AI Threat Landscape Report as a practical guide to understanding the security risks that can affect every industry and to provide actionable steps to implement security measures at your organization.

These days, the conversation around AI often revolves around its safety and ethical uses. However, what's often overlooked is the security and safety of AI systems themselves. Just like any other technology, attackers can abuse AI-based solutions, leading to disruption, financial loss, reputational harm, or even endangering human health and life.

Three Major Types of Attacks on AI:

1. Adversarial Machine Learning Attacks:

These attacks target AI algorithms, aiming to alter their behavior, evade detection, or steal the underlying technology.

2. Generative AI System Attacks:

These attacks focus on bypassing filters and restrictions of AI systems to generate harmful or illegal content.

3. Supply Chain Attacks:

These attacks occur when a trusted third-party vendor is compromised, leading to the compromise of the product sourced from them.

Adversarial Machine Learning Attacks:

To understand adversarial machine learning attacks, let's first go over some basic terminology:

Artificial Intelligence: Any system that mimics human intelligence.

Machine Learning: Technology enabling AI to learn and improve its predictions.

Machine Learning Models: Decision-making systems at the core of most modern AI.

Model Training: Process of feeding data into a machine learning algorithm to produce a trained model.

Adversarial attacks against machine learning usually aim to alter the model's behavior, bypass or evade the model, or replicate the model or its data. These attacks include techniques like data poisoning, where the model's behavior is manipulated during training.

Data Poisoning:

Data poisoning attacks aim to modify the model's behavior. The goal is to make the predictions biased, inaccurate, or otherwise manipulated to serve the attacker’s purpose. Attackers can perform data poisoning in two ways: by modifying entries in the existing dataset or injecting the dataset with a new, specially doctored portion of data.

Model Evasion:

Model evasion, or model bypass, aims to manipulate model inputs to produce misclassifications. Adversaries repetitively query the model with crafted requests to understand its decision boundaries. These attacks have been observed in various systems, from spam filters to intrusion detection systems.

Model Theft:

Intellectual property theft, or model theft, is another motivation for attacks on AI systems. Adversaries may aim to steal the model itself, reconstruct training data, or create near-identical replicas. These attacks pose risks to both intellectual property and data privacy.

20% of IT leaders say their companies are planning and testing for model theft

Attacks Specific to Generative AI:

Generative AI systems face unique challenges, including prompt injection techniques that trick AI bots into performing unintended actions or code injection that allows arbitrary code execution.

Supply Chain Attacks:

Supply chain attacks exploit trust and reach, affecting downstream customers of compromised products. In the AI realm, vulnerabilities in model repositories, third-party contractors, and ML tooling introduce significant risks.

75% of IT leaders say that third-party AI integrations are riskier than existing threats

Wrapping Up:

Attacks on AI systems are already occurring, but the scale and scope remain difficult to assess due to limited awareness and monitoring. Understanding these threats is crucial for developing comprehensive security measures to safeguard AI systems and mitigate potential harms. As AI advances, proactive efforts to address security risks must evolve in parallel to ensure responsible AI development and deployment.

View the full Threat Landscape Report here.

Risks Related to the Use of AI

To help understand the evolving cybersecurity environment, we developed HiddenLayer’s 2024 AI Threat Landscape Report as a practical guide to understanding the security risks that can affect every industry and to provide actionable steps to implement security measures at your organization.

Part 1: A Summary of the AI Threat Landscape Report

To help understand the evolving cybersecurity environment, we developed HiddenLayer’s 2024 AI Threat Landscape Report as a practical guide to understanding the security risks that can affect every industry and to provide actionable steps to implement security measures at your organization.

As artificial intelligence (AI) becomes a household topic, it is both a beacon of innovation and a potential threat. While AI promises to revolutionize countless aspects of our lives, its misuse and unintended consequences pose significant threats to individuals and society as a whole.

Adversarial Exploitation of AI

The versatility of AI renders it susceptible to exploitation by various adversaries, including cybercriminals, terrorists, and hostile nation-states. Generative AI, in particular, presents a myriad of vulnerabilities:

- Manipulation for Malicious Intent: Adversaries can manipulate AI models to disseminate biased, inaccurate, or harmful information, perpetuating misinformation and propaganda, thereby undermining trust in information sources and distorting public discourse.

- Creation of Deepfakes: The creation of hyper-realistic deepfake images, audio, and video poses threats to individuals' privacy, financial security, and public trust, as malicious actors can leverage these deceptive media to manipulate perceptions and deceive unsuspecting targets.

In one of the biggest deepfake scams to date, adversaries were able to defraud a multinational corporation of $25 million. The financial worker who approved the transfer had previously attended a video conference call with what seemed to be the company's CFO, as well as a number of other colleagues the employee recognized. These all turned out to be deepfake videos.

- Privacy Concerns: Data privacy breaches are a significant risk associated with AI-based tools, with potential legal ramifications for businesses and institutions, as unauthorized access to sensitive information can lead to financial losses, reputational damage, and regulatory penalties.

- Copyright Violations: Unauthorized use of copyrighted materials in AI training datasets can lead to plagiarism and copyright infringements, resulting in legal disputes and financial liabilities, thereby necessitating robust mechanisms for ensuring compliance with intellectual property laws.

- Accuracy and Bias Issues: AI models trained on vast datasets may perpetuate biases and inaccuracies, leading to discriminatory outcomes and misinformation dissemination, highlighting the importance of continuous monitoring and mitigation strategies to address bias and enhance the fairness and reliability of AI systems.

The societal implications of AI misuse are profound and multifaceted:

Besides biased and inaccurate information, a generative AI model can also give advice that appears technically sane but can prove harmful in certain circumstances or when the context is missing or misunderstood

- Emotional AI Concerns: AI applications designed to recognize human emotions may provide advice or responses that lack context, potentially leading to harmful consequences in professional and personal settings. This underscores the need for ethical guidelines and responsible deployment practices to mitigate risks and safeguard users' well-being.

- Manipulative AI Chatbots: Malicious actors can exploit AI chatbots to manipulate individuals, spread misinformation, and even incite violence, posing grave threats to public safety and security, necessitating robust countermeasures and regulatory oversight to detect and mitigate malicious activities perpetrated through AI-powered platforms.

Looking Ahead

As AI continues to proliferate, addressing these risks comprehensively and proactively is imperative. Ethical considerations, legal frameworks, and technological safeguards must evolve in tandem with AI advancements to mitigate potential harms and safeguard societal well-being.

While AI holds immense promise for innovation and progress, acknowledging and mitigating its associated risks is crucial to harnessing its transformative potential responsibly. Only through collaborative efforts and a commitment to ethical AI development can we securely navigate the complex landscape of artificial intelligence.

View the full Threat Landscape Report here.

The Beginners Guide to LLMs and Generative AI

Large Language Models are quickly sweeping the globe. In a world driven by artificial intelligence (AI), Large Language Models (LLMs) are leading the way, transforming how we interact with technology. The unprecedented rise to fame leaves many reeling. What are LLM’s? What are they good for? Why can no one stop talking about them? Are they going to take over the world? As the number of LLMs grows, so does the challenge of navigating this wealth of information. That’s why we want to start with the basics and help you build a foundational understanding of the world of LLMs.

Introduction

Large Language Models are quickly sweeping the globe. In a world driven by artificial intelligence (AI), Large Language Models (LLMs) are leading the way, transforming how we interact with technology. The unprecedented rise to fame leaves many reeling. What are LLM’s? What are they good for? Why can no one stop talking about them? Are they going to take over the world? As the number of LLMs grows, so does the challenge of navigating this wealth of information. That’s why we want to start with the basics and help you build a foundational understanding of the world of LLMs.

What are LLMs?

So, what are LLMs? Large Language Models are advanced artificial intelligence systems designed to understand and generate human language. These models are trained on vast amounts of text data, enabling them to learn the patterns and nuances of language. The basic architecture of Large Language Models is based on transformers, a type of neural network architecture that has revolutionized natural language processing (NLP). Transformers are designed to handle sequential data, such as text, by processing it all at once rather than sequentially, as in traditional Neural Networks. Ultimately, these sophisticated algorithms, designed to understand and generate human-like text, are not just tools but collaborators, enhancing creativity and efficiency across various domains.

Here’s a brief overview of how LLMs are built and work:

- Transformers: Transformers consist of an encoder and a decoder. In the context of LLMs, the encoder processes the input text while the decoder generates the output. Each encoder and decoder layer in a transformer consists of multi-head self-attention mechanisms and position-wise fully connected feed-forward networks.

- Attention Mechanisms: Attention mechanisms allow transformers to focus on different parts of the input sequence when processing each word or token. This helps the model understand the context of the text better and improves its ability to generate coherent responses.

- Training Process: LLMs are typically pre-trained on large text corpora using unsupervised learning techniques. During pre-training, the model learns to predict the next word in a sequence given the previous words. This helps the model learn the statistical patterns and structures of language.

- Fine-tuning: After pre-training, LLMs can be fine-tuned on specific tasks or datasets to improve their performance on those tasks. Fine-tuning involves training the model on a smaller, task-specific dataset to adapt it to the specific requirements of the task.

Ultimately, the architecture and functioning of LLMs, based on transformers and attention mechanisms, have led to significant advancements in NLP, enabling these models to perform a wide range of language-related tasks with impressive accuracy and fluency.

LLMs In The Real World

Okay, so we know how they are built and work, but how are LLMs actually being used today? LLMs are currently being used in various applications in a myriad of industries thanks to their uncanny ability to understand and generate human-like text. Some of the key uses of LLMs today include:

- Chatbots: LLMs are used to power chatbots that can engage in natural language conversations with users. These chatbots are used in customer service, virtual assistants, and other applications where interaction with users is required.

- Language Translation: LLMs are used for language translation, enabling users to translate text between different languages accurately. This application is particularly useful for global communication and content localization.

- Content Generation: LLMs are used to generate content such as articles, product descriptions, and marketing copy. They can generate coherent and relevant text, making them valuable tools for content creators.

- Summarization: LLMs can be used to summarize long pieces of text, such as articles or documents, into shorter, more concise summaries. This application is helpful for quickly extracting key information from large amounts of text.

- Sentiment Analysis: LLMs can analyze text to determine the sentiment or emotion expressed in the text. This application is used in social media monitoring, customer feedback analysis, and other applications where understanding sentiment is important.

- Language Modeling: LLMs can be used as language models to improve the performance of other NLP tasks, such as speech recognition, text-to-speech synthesis, and named entity recognition.

- Code Generation: LLMs can be used to generate code for programming tasks, such as auto-completion of code snippets or even generating entire programs based on a description of the desired functionality.

These are just a few examples of the wide range of ways LLMs are leveraged today. From a company implementing an internal chatbot for its employees to advertising agencies using content creation to cultivate ads to a global company using language translation and summarization on cross-functional team communication - the uses of LLMs in a company are endless, and the potential for efficiency, innovation, and automation is limitless. As LLMs continue to progress and evolve, they will have even more unique purposes and adoptions in the future.

Where are LLMs Headed?

With all this attention on LLMs and what they are doing today, it is hard not to wonder where exactly LLMs are headed. Future trends in LLMs will likely focus on advancements in model size, efficiency, and capabilities. This includes the development of larger models, more efficient training processes, and enhanced capabilities such as improved context understanding and creativity. New applications, such as multimodal integration, the neural integration or combination of information from different sensory modalities, and continual learning, a machine learning approach that enables models to integrate new data without explicit retraining, are also expected to emerge, expanding the scope of what LLMs can achieve. While we can speculate on trends, the truth is that this technology could expand in ways that have not yet been seen. This kind of potential is unprecedented. That's something that makes this technology so invigorating - it is constantly evolving, shifting, and growing. Every day, there is something new to learn or understand about LLMs and AI in general.

Ethical Concerns Around LLMs

While LLMs may sound too good to be true, with the increase in efficiency, automation, and versatility that they bring to the table, they still have plenty of caution signs. LLMs can present bias. LLMs can exhibit bias based on the data they are trained on, which can lead to biased or unfair outcomes. This is a significant ethical concern, as biased language models can perpetuate stereotypes and discrimination. There are also ethical concerns related to the use of LLMs, such as the potential for misuse, privacy violations, and the impact on society. For example, LLMs could be used to generate fake news or misinformation, leading to social and political consequences. Another component that leaves some weary is data privacy. LLMs require large amounts of data to train effectively, which can raise privacy concerns, especially when sensitive or personal information is involved. Ensuring the privacy of data used to train LLMs is a critical challenge. So, while LLMs can provide many benefits, like competitive advantage, they should still be handled responsibly and with caution.

Efforts to address these ethical considerations, such as bias, privacy, and misuse, are ongoing. Techniques like dataset curation, bias mitigation, and privacy-preserving methods are being used to mitigate these issues. Additionally, there are efforts to promote transparency and accountability in the use of LLMs to ensure fair and ethical outcomes.

Ethical considerations surrounding Large Language Models (LLMs) are significant. Here's an overview of these issues and efforts to address them:

- Bias: LLMs can exhibit bias based on the data they are trained on, which can lead to biased or unfair outcomes. This bias can manifest in various ways, such as reinforcing stereotypes or discriminating against certain groups. Efforts to address bias in LLMs include:

- Dataset Curation: Curating diverse and representative datasets to train LLMs can help reduce bias by exposing the model to various perspectives and examples.

- Bias Mitigation Techniques: Techniques such as debiasing algorithms and adversarial training can be used to reduce bias in LLMs by explicitly correcting for biases in the data.

- Privacy: LLMs require large amounts of data to train effectively, raising concerns about the privacy of the data used to train these models. Privacy-preserving techniques such as federated learning, differential privacy, and secure multi-party computation can be used to address these concerns by ensuring that sensitive data is not exposed during training.

- Misuse: LLMs can be misused to generate fake news, spread misinformation, or engage in malicious activities. Efforts to address the misuse of LLMs include:

- Content Moderation: Implementing content moderation policies and tools to detect and prevent the spread of misinformation and harmful content generated by LLMs.

- Transparency and Accountability: Promoting transparency and accountability in the use of LLMs, including disclosing how they are trained and how their outputs are used.

- Fairness: Ensuring that LLMs are fair and equitable in their outcomes is another important ethical consideration. This includes ensuring that LLMs do not discriminate against individuals or groups based on protected characteristics such as race, gender, or religion.

- Content Moderation: Implementing content moderation policies and tools to detect and prevent the spread of misinformation and harmful content generated by LLMs.

To address these ethical considerations, researchers, developers, policymakers, and other stakeholders must collaborate to ensure that LLMs are developed and used responsibly. These concerns are a great example of how cutting-edge technology can be a double-edged sword when not handled correctly or with enough consideration.

Security Concerns Around LLMs

Ethical concerns aren’t the only things serving as a speed bump of generative AI adoption. Generative AI is the fastest technology ever adopted. Like most innovative technologies, adoption is paramount, while security is an afterthought. The truth is generative AI can be attacked by adversaries - just as any technology is vulnerable to attacks without security. Generative AI is not untouchable.

Here is a quick overview of how adversaries can attack generative AI:

- Prompt Injection: Prompt injection is a technique that can be used to trick an AI bot into performing an unintended or restricted action. This is done by crafting a special prompt that bypasses the model’s content filters. Following this special prompt, the chatbot will perform an action that its developers originally restricted.

- Supply Chain Attacks: Supply chain attacks occur when a trusted third-party vendor is the victim of an attack and, as a result, the product you source from them is compromised with a malicious component. Supply chain attacks can be incredibly damaging and far-reaching.

- Model Backdoors: Besides injecting traditional malware, a skilled adversary could also tamper with the model's algorithm in order to modify the model's predictions. It was demonstrated that a specially crafted neural payload could be injected into a pre-trained model and introduce a secret unwanted behavior to the targeted AI. This behavior can then be triggered by specific inputs, as defined by the attacker, to get the model to produce a desired output. It’s commonly referred to as a ‘model backdoor.’

Conclusion

As LLMs continue to push the boundaries of AI capabilities, it's crucial to recognize the profound impact they can have on society. They are not here to take over the world but rather lend a hand in enhancing the world we live in today. With their ability to shape narratives, influence decisions, and even create content autonomously - the responsibility to use LLMs ethically and securely has never been greater. As we continue to advance in the field of AI, it is essential to prioritize ethics and security to maximize the potential benefits of LLMs while minimizing their risks. Because as AI advances, so must we.

Securing Your AI System with HiddenLayer

Amidst escalating global AI regulations, including the European AI Act and Biden’s Executive AI Order, in addition to the release of recent AI frameworks by prominent industry leaders like Google and IBM, HiddenLayer has been working diligently to enhance its Professional Services to meet growing customer demand. Today, we are excited to bring upgraded capabilities to the market, offering customized offensive security evaluations for companies across every industry, including an AI Risk Assessment, ML Training, and, maybe most excitingly, our Red Teaming services.

HiddenLayer releases enhanced capabilities to their AI Risk Assessment, ML Training, and Red Teaming Services

Amidst escalating global AI regulations, including the European AI Act and Biden’s Executive AI Order, in addition to the release of recent AI frameworks by prominent industry leaders like Google and IBM, HiddenLayer has been working diligently to enhance its Professional Services to meet growing customer demand. Today, we are excited to bring upgraded capabilities to the market, offering customized offensive security evaluations for companies across every industry, including an AI Risk Assessment, ML Training, and, maybe most excitingly, our Red Teaming services.

What does Red Teaming mean for AI?

In cybersecurity, red teaming typically denotes assessing and evaluating an organization’s security posture through a series of offensive means. Of course, this may sound like a fancy way of describing a hacker, but in these instances, the red teamer is employed or contracted to perform this testing.

Even after the collective acceptance that AI will define this decade, implementing internal red teams is often only possible by industry giants such as Google, NVIDIA, and Microsoft - each being able to afford the dedicated resources to build internally focused teams to protect the models that they develop and those they put to work. Our offerings aim to expand this level of support and best practices to companies of all sizes through our ML Threat Operations team. This team partners closely with your data science and cybersecurity teams with the knowledge, insight, and tools needed to protect and maximize AI investments.

Advancements in AI Red Teaming

It would be unfair to mention these companies by name and not highlight some of their incredible work to bring light to the security of AI systems. In December 2021, Microsoft published its Best practices for AI security risk management. In June 2023, NVIDIA introduced its red team to the world alongside the framework it uses as the foundation for its assessments, and most recently, in July 2023, Google announced their own AI red team following the release of their Secure AI Framework (SAIF).

So what has turned the heads of these giants, and what’s stopping others from following suit?

Risk vs reward

The rapid innovation and integration of AI into our lives has far outpaced the security controls we’ve placed around it. With LLMs taking the world by storm over the last year, a growing awareness has manifested about the sheer scale of critical decisions being deferred to AI systems. The honeymoon period has started to wane, leaving an uncomfortable feeling that the systems we’ve placed so much trust in are inherently vulnerable.

Further, there continues to be a cybersecurity skills shortage. The shortage is even more acute for individuals at the intersection of AI and cybersecurity. Those with the necessary experience in both fields are often already employed by industry leaders and firms specializing in securing AI. This can mean that talent is hard to find yet harder to acquire.

To help address this problem, HiddenLayer provides our own ML Threat Operations team, specifically designed to help companies secure their AI and ML systems without having to find, hire, and grow their own bespoke AI security teams. We engage directly with data scientists and cybersecurity stakeholders to help them understand and mitigate the potential risks posed throughout the machine learning development lifecycle and beyond.

To achieve this, we have three distinct offerings specifically designed to give you peace of mind.

Securing AI Systems with HiddenLayer Professional Services

HiddenLayer Red Teaming

AI models are incredibly prone to adversarial attacks, including inference, exfiltration, and prompt injection, to name just a few modern attack types. An example of an attack on a digital supply chain can include an adversary exploiting highly weighted features in a model to game the system in the way it benefits them - for example, to push through fraudulent payments, force loan approvals, or evade spam/malware detection. We suggest reviewing the MITRE ATLAS framework for a more exhaustive listing of attacks.

AI red teaming is much like the well-known pentest but, in this instance, for models. It can also take many forms, from attacking the models themselves to evaluating the systems underneath and how they’re implemented. When understanding how your models fare under adversarial attack, the HiddenLayer ML Threat Operations team is uniquely positioned to provide invaluable insight. Taking known tactics and techniques published in frameworks such as MITRE ATLAS in addition to custom attack tooling, our team can work to assess the robustness of models against adversarial machine learning attacks.

HiddenLayer's red teaming assessment uncovers weaknesses in model classification that adversaries could exploit for fraudulent activities without triggering detection. Armed with a prioritized list of identified exploits, our client can channel their resources, involving data science and cyber teams, towards mitigation and remediation efforts with maximum impact. The result is an enhanced security posture for the entire platform without introducing additional friction for internal or external customers.

HiddenLayer AI Risk Assessment

When we think of attacking an AI system, the focal point is often the model that underpins it. Of course, this isn’t incorrect, but the technology stack that supports the model deployment and the model’s placement within the company’s general business context can be just as important, if not more important.

To help a company understand the security posture of their AI systems, we created the HiddenLayer AI Risk Assessment, a well-considered, comprehensive evaluation of risk factors from training to deployment and everything in between. We work closely with data science teams and cybersecurity stakeholders to discover and address vulnerabilities and security concerns and provide actionable insights into their current security posture. Regardless of organizational maturity, this evaluation has proved incredibly helpful for practitioners and decision-makers alike to understand what they’ve been getting wrong and what they’ve been getting right.

For a more in-depth understanding of the potential security risks of AI models, check out our past blogs on the topic, such as “The Tactics and Techniques of Adversarial ML.”

Adversarial ML Training

Numerous attack vectors in the overall ML operations lifecycle are still largely unknown to those tasked with building and securing these systems. A traditional penetration testing or red team may be on an engagement and run across machine learning systems or models and not know what to do with them. Alternatively, data science teams building models for their organizations may not follow best practices regarding AI hygiene security. For both teams, learning about the attack vectors and how they can be exploited can uplift their skills and improve their output.

The Adversarial ML Training is a multi-day course alternating between instruction and interactive hands-on lab assignments. Attendees will build up and train their own models to understand ML fundamentals before getting their hands dirty by attacking the models via inference-style attacks and injecting malware directly into the models themselves. Attendees can take these lessons back with an understanding of how to safely apply the principles within their organization.

Learn More

HiddenLayer believes that, as an industry, we can get ahead of securing AI. With decades of experience safeguarding our most critical technologies, the cybersecurity industry plays a pivotal role in shaping the solution. Securing your organization’s AI infrastructure does not have to be a daunting task. Our Professional Services team is here to help share how to test and secure your AI systems from end to end.

Learn more about how we can help you protect your advantage.

A Guide to Understanding New CISA Guidelines

Artificial intelligence (AI) is the latest, and one of the largest, advancements of technology to date. Like any other groundbreaking technology, the potential for greatness is paralleled only by the potential for risk. AI opens up pathways of unprecedented opportunity. However, the only way to bring that untapped potential to fruition is for AI to be developed, deployed, and operated securely and culpably. This is not a technology that can be implemented first and secured second. When it comes to utilizing AI, cybersecurity can no longer trail behind and play catch up. The time for adopting AI is now. The time for securing it was yesterday.

Artificial intelligence (AI) is the latest, and one of the largest, advancements of technology to date. Like any other groundbreaking technology, the potential for greatness is paralleled only by the potential for risk. AI opens up pathways of unprecedented opportunity. However, the only way to bring that untapped potential to fruition is for AI to be developed, deployed, and operated securely and culpably. This is not a technology that can be implemented first and secured second. When it comes to utilizing AI, cybersecurity can no longer trail behind and play catch up. The time for adopting AI is now. The time for securing it was yesterday.

https://www.youtube.com/watch?v=bja6hJZZrUU

We at HiddenLayer applaud the collaboration between CISA and the Australian Signals Directorate’s Australian Cyber Security Centre (ASD’s ACSC) on Engaging with Artificial Intelligence—joint guidance, led by ACSC, on how to use AI systems securely. The guidance provides AI users with an overview of AI-related threats and steps to help them manage risks while engaging with AI systems. The guidance covers the following AI-related threats:

- Data poisoning

- Input manipulation

- Generative AI hallucinations

- Privacy and intellectual property threats

- Model stealing and training data exfiltration

- Re-identification of anonymized data

The recommended remediation of following ‘secure design’ principles seems daunting since it requires significant resources throughout a system’s life cycle, meaning developers must invest in features, mechanisms, and implementation of tools that protect customers at each layer of the system design and across all stages of the development life cycle. But what if we told you there was an easier way to implement those secure design principles than dedicating your endless resources?

Luckily for those who covet AI's innovation while still wanting to remain secure on all fronts, HiddenLayer’s AI Security Platform plays a pivotal role in fortifying and ensuring the security of machine learning deployments. The platform comprises our Model Scanner and Machine Learning Detection and Response.

HiddenLayer Model Scanner ensures that models are free from adversarial code before entering corporate environments. The Model Scanner, backed by industry recognition, aligns with the guidance's emphasis on scanning models securely. By scanning a broader range of ML model file types and providing flexibility in deployment, HiddenLayer Model Scanner contributes to a secure digital supply chain. Organizations benefit from enhanced security, insights into model vulnerabilities, and the confidence to download models from public repositories, accelerating innovation while maintaining a secure ML operational environment.

Once models are deployed, HiddenLayer Machine Learning Detection and Response (MLDR) defends AI models against input manipulation, infiltration attempts, and intellectual property theft. Aligning with the collaborative guidance, HiddenLayer addresses threats such as model stealing and training data exfiltration. With automated processes, MLDR provides a proactive defense mechanism, detecting and responding to AI model breach attempts. The scalability and unobtrusive nature of MLDR's protection ensures that organizations can manage AI-related risks without disrupting their workflow, empowering security teams to identify and report various adversarial activities with Model Scanner.

Both MLDR and Model Scanner align with the collaborative guidance's objectives, offering organizations immediate and continuous real-time protection against cyber threats, supporting innovation in AI/ML deployment, and assisting in maintaining compliance by safeguarding against potential threats outlined in 3rd party frameworks such as MITRE ATLAS.

“Last year I had the privilege to participate in a task force led by the Center for Strategic International Studies (CSIS) to explore and make recommendations for CISA's evolving role to protect the .gov mission. CISA has done an excellent job in protecting our nation and our assets. I am excited to see once again CISA take a forward leaning position to protect AI which is quickly becoming embedded into the fabric of every application and every device. Our collective responsibility to protect our nation going forward requires all of us to make the leap to be ahead of the risks in front of us before harm occurs. To do this, we must all embrace and adopt the recommendations that CISA and the other agencies have made." said Malcolm Harkins, Chief Security & Trust Officer at HiddenLayer.

While it is tempting to hop on the AI bandwagon as quickly as possible, it is not responsible to do so without first securing it. That is why it is imperative to safeguard your most valuable asset from development to operation, and everything in between.

For more information on the CISA guidance, click here.

What SEC Rules Mean for your AI

On July 26th, 2023 the Securities and Exchange Commission (SEC) released its final rule on Cybersecurity Risk Management, Strategy, Governance, and Incident Disclosure. Organizations now have 5 months to craft and confirm a compliance plan before the new regulations go into effect mid-December. The revisions from these proposed rules aim to streamline the disclosure requirements in many ways. But what exactly are these SEC regulations requiring you to disclose, and how much? And does this apply to my organization’s AI?

Introduction

On July 26th, 2023 the Securities and Exchange Commission (SEC) released its final rule on Cybersecurity Risk Management, Strategy, Governance, and Incident Disclosure. Organizations now have 5 months to craft and confirm a compliance plan before the new regulations go into effect mid-December. The revisions from these proposed rules aim to streamline the disclosure requirements in many ways. But what exactly are these SEC regulations requiring you to disclose, and how much? And does this apply to my organization’s AI?

The Rules & The “So What?”

The new regulations will require registrants to disclose any cybersecurity incident they determine to be material and describe the material aspects of the nature, scope, and timing of the incident, as well as the material impact or reasonably likely material impact of the incident on the registrant, including its financial condition and results of operations. While also necessitating that “registrants must determine the materiality of an incident without unreasonable delay following discovery and, if the incident is determined material, file an Item 1.05 Form 8-K generally within four business days of such determination.” Something else to note is that “New Regulation S-K Item 106 will require registrants to describe their processes, if any, for assessing, identifying, and managing material risks from cybersecurity threats, as well as whether any risks from cybersecurity threats, including as a result of any previous cybersecurity incidents, have materially affected or are reasonably likely to materially affect the registrant.”

The word disclosure can be daunting. So what does “disclosing” really mean? Basically, companies must disclose any incident that affects the materiality of the company. Essentially, anything that affects what's important to your company, shareholders, or clients. The allotted time to disclose does not leave much time for dilly dallying as companies only have about 4 days to release this information. If the company fails to fit their disclosure in this time frame they are subject to heavy fines, penalties, and potentially an investigation. Another noteworthy thing to address is that companies now must describe their process for mitigating risk. Companies must have a plan stating not only their cybersecurity measures but also the action they will take if a breach occurs. In reality, many companies are not ready to lift the hood and expose their cyber capabilities underneath, especially in regards to the new threat landscape of the quickly growing AI sector.

In Regards to AI

These new rules mean that companies are now liable to report any adversarial attacks on their AI models. Not only do companies need a process for mitigating risk with models before they are deployed in their system, but they also need a process for mitigating and monitoring risks as the model is live. Despite AI’s new found stardom, it remains wildly under secured today. Companies are waiting for cybersecurity to catch up to AI instead of creating and executing a real, tangible security plan to protect their AI. The truth is, most companies are under prepared to showcase a security plan for their models. Many companies today are utilizing AI to create material benefit for the company. However, wherever a company is creating material benefit, they are also creating the risk of material damage, especially if that model being utilized is not secure. Because if the model is not secure (see figure 1.0 below) then it is not trustworthy. These SEC rules are saying we can no longer wait for cybersecurity to play catch up - the time to secure your AI models was yesterday.

Figure 1.0

Looking at figure 1.1 below, we can see that 76% of ML attacks have had a physical world impact, meaning that 76% of ML attacks affected the materiality of a company, their clients, and/or our society. This number is staggering. It is no surprise, looking at the data, that “Senate Majority Leader Chuck Schumer” is taking a step in the right direction by holding “a series of AI ‘Insight Forums’ to “lay down the foundation for AI policy.” These forums are to be held in September and October, “in place of congressional hearings that focus on senators’ questions, which Schumer said would not work for AI’s complex issues around finding a path towards AI legislation and regulation.” Due to the complexity of the issues being discussed and the vast amount of public noise around them, the “Senate meeting with top AI leaders will be ‘closed-door,’ no press or public allowed.” These forums emphasize the government's efforts at accelerating AI adoption in a secure manner, which is applaudable as the US should aim to secure its leadership position as we enter a new digital era.

Figure 1.1

Is It Enough?

While our government is moving in the right direction, there's still more to be done. Looking at this data we see that no one is as secure as they think they are. These attacks aren’t easy to brush under the rug as though they had no impact. A majority of attacks, 76%, directly impacted society in some way. And with the SEC rules going into effect, all of these attacks would now be required to be disclosed. Is your company ready? What are you doing now to secure your AI processes and deployed models?

The truth is there is still a ton of gray space surrounding security for AI. But it is no longer an issue that can be placed on the back burner to be answered later. As we see in this data, as we understand with these SEC rules, the time for securing our models was yesterday.

Where We Go From Here

HiddenLayer believes as an industry we can get ahead of securing AI, and with decades of experience safeguarding our most critical technologies, the cybersecurity industry plays a pivotal role in shaping the solution. HiddenLayer’s MLSec Platform consists of a suite of products that provide comprehensive Artificial Intelligence security to protect Enterprise Machine Learning Models against adversarial machine learning attacks, vulnerabilities, and malicious code injections. HiddenLayer’s patent-pending solution, MLDR, provides a noninvasive, software-based platform that monitors the inputs and outputs of your machine learning algorithms for anomalous activity consistent with adversarial ML attack techniques. It's detection and response capabilities support efforts to disclose in a timely manner

“Disclose” does not have to be a daunting word. It does not have to make companies nervous or uneasy. Companies can feel secure in their cybersecurity efforts, they can trust their ML models and AI processes. By implementing the right risk mitigation plan and covering all of their bases, companies can step into this new digital age feeling confident in the security and protection of their technological assets.

The Real Threats to AI Security and Adoption

AI is the latest, and likely one of the largest, advancements in technology of all time. Like any other new innovative technology, the potential for greatness is paralleled by the potential for risk. As technology evolves, so do threat actors. Despite how state-of-the-art Artificial Intelligence (AI) seems, we’ve already seen it being threatened by new and innovative cyber security attacks everyday.

AI is the latest, and likely one of the largest, advancements in technology of all time. Like any other new innovative technology, the potential for greatness is paralleled by the potential for risk. As technology evolves, so do threat actors. Despite how state-of-the-art Artificial Intelligence (AI) seems, we’ve already seen it being threatened by new and innovative cyber security attacks everyday.

When we hear about AI in terms of security risks, we usually envision a superhuman AI posing an existential threat to humanity. This very idea has inspired countless dystopian stories. However, as things stand, we are not yet close to inventing a truly conscious AI and there’s a real opportunity to use AI to our advantage; working better for us, not against us. Instead of focusing on sci-fi scenarios, we believe we should pay much more attention to the opportunities that AI will provide society as a whole and find ways to protect against a more pressing risk – the risk of humans attacking AI.

Academic research studies have already proven that machine learning is susceptible to attack. For example, many products such as web applications, mobile apps, or embedded devices share their entire Machine Learning (ML) model with the end-user, causing their ML/AI solutions to be vulnerable to a wide range of abuse. However, awareness of the security risks faced by ML/AI has barely spread outside of academia, and stopping attacks is not yet within the scope of today’s cyber security products. Meanwhile, cyber-criminals are already getting their hands dirty conducting novel attacks to abuse ML/AI for their own gain. This is why it is so alarming that, in a Forrester Consulting study commissioned by HiddenLayer, 40% -52% of respondents were either using a manual process to address these mounting threats or they were still discussing how to even address such threats. It’s no wonder that in that same report, 86% of respondents were ‘extremely concerned or concerned’ about their organization's ML/AI model security.

The reasons for attacking ML/AI are typically the same as any other kind of cyber attack, the most relevant being:

- Financial gain

- Securing a competitive advantage

- Hurting competitors

- Manipulating public opinion

- Bypassing security solutions

The truth is, society’s current lack of ML/AI security is drastically hurting our ability to reach the full potential and efficiency of AI powered tools. Because if we are being honest, we know manual processes are simply no longer sustainable. To continue to maximize time and effort and utilize cutting edge technology, AI processes are vital to any growing organization.

Figure 1.1

As we can see in the figure above, cyber risk is increasing at an accelerated rate given where the control line sits. Looking at this we understand the complicated position companies currently reside in. To say yes to efficiency, innovation, and better performance for the company as a whole is to also say yes to an alarming amount of risk. Saying yes to this risk can mean the possibility of hurting a corporation's materiality and respectability. As a shareholder, board member, or executive, how can we say no to the progress of business? But how can we say yes to that much risk?

However it is not a complete doomsday.

Fortunately, HiddenLayer has over thirty years of developing protection and prevention solutions for cyber attacks. That means we do not have to impede the growth of AI as we ensure it's secure. HiddenLayer and DataBricks have partnered together to merge AI and cybersecurity tools into a single stack ensuring security is built into AI from the Lakehouse.

Databricks Lakehouse Platform enables data science teams to design, develop, and deploy their ML Models rapidly while HiddenLayer MLSec Platform provides comprehensive security to protect, preserve, detect, and respond to Adversarial Machine Learning attacks on those models. Together, the two solutions empower companies to rapidly and securely deliver on their mission to advance their Artificial Intelligence strategy.

Incorporating security into machine learning operations is critical for data science teams. With the increasing use of machine learning models in sensitive areas such as healthcare, finance, and national security, it is essential to ensure that machine learning models are secure and protected against malicious attacks. By embedding security throughout the entire machine learning lifecycle, from data collection to deployment, companies can ensure that their models are reliable and trustworthy.

Cyber protection does not have to trail behind technological advancement, playing catch up later down the line as risks continue to multiply and increase in complexity. Instead, if cybersecurity is able to maintain pace with the advancement of technology it could serve as a catalyst for adoption of AI for corporations, government and society as a whole. Because at the end of the day, the biggest threat to continued AI adoption is cybersecurity itself.

For more information on the Databricks and HiddenLayer solution please visit: HiddenLayer Partners with Databricks.

A Beginners Guide to Securing AI for SecOps

Artificial Intelligence (AI) and Machine Learning (ML), the most common application of AI, are proving to be a paradigm-shifting technology. From autonomous vehicles and virtual assistants to fraud detection systems and medical diagnosis tools, practically every company in every industry is entering into an AI arms race seeking to gain a competitive advantage by utilizing ML to deliver better customer experiences, optimize business efficiencies, and accelerate innovative research.

Introduction

Artificial Intelligence (AI) and Machine Learning (ML), the most common application of AI, are proving to be a paradigm-shifting technology. From autonomous vehicles and virtual assistants to fraud detection systems and medical diagnosis tools, practically every company in every industry is entering into an AI arms race seeking to gain a competitive advantage by utilizing ML to deliver better customer experiences, optimize business efficiencies, and accelerate innovative research.

CISOs, CIOs, and their cybersecurity operation teams are accustomed to adapting to the constant changes to their corporate environments and tech stacks. However, the breakneck pace of AI adoption has left many organizations struggling to put in place the proper processes, people, and controls necessary to protect against the risks and attacks inherent to ML.

According to a recent Forrester report, "It's Time For Zero Trust AI," a majority of decision-makers responsible for AI Security responded that Machine Learning (ML) projects will play a critical or important role in their company’s revenue generation, customer experience and business operations in the next 18 months. Alarmingly though, the majority of respondents noted they currently rely on manual processes to address ML model threats and 86% of respondents were ‘extremely concerned or concerned’ about their organization's ML model security. To address this challenge, a majority of respondents expressed interest to invest in a solution that manages ML model integrity and security within the next 12 months.

In this blog, we will delve into the intricacies of Security for AI and its significance in the ever-evolving threat landscape. Our goal is to provide security teams with a comprehensive overview of the key considerations, risks, and best practices that should be taken into account when securing AI deployments within their organizations.

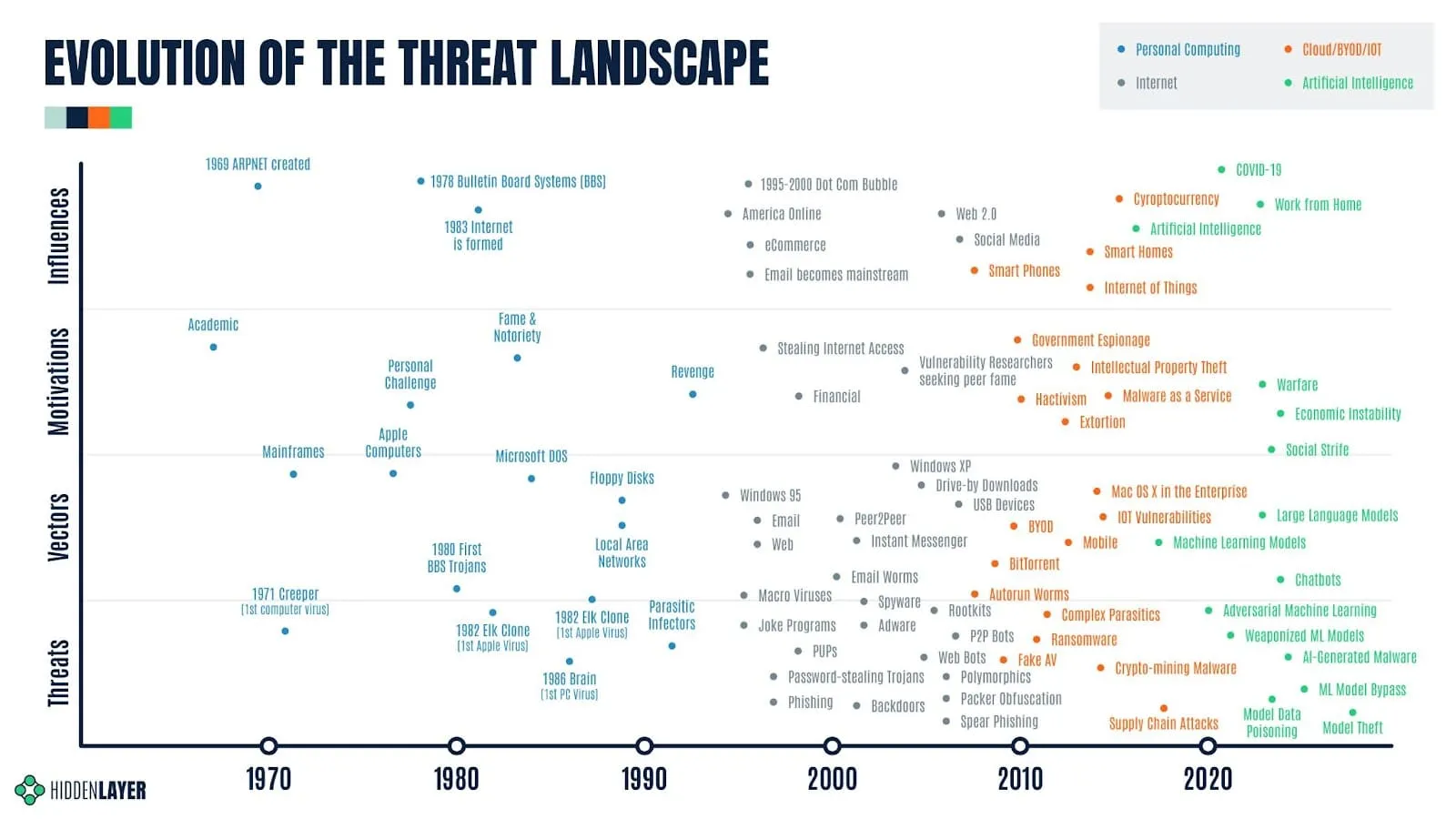

Before we deep dive into Security for AI, let’s first take a step back and look at the evolution of the cybersecurity threat landscape through its history to understand what is similar and different about AI compared to past paradigm-shifting moments.

Personal Computing Era - The Digital Revolution (aka the Third Industrial Revolution) ushered in the era of the Information Age and gave us mainframe computers, servers, and personal computing available to the masses. It also introduced the world to computer viruses and Trojans, making anti-virus software one of the founding fathers of cybersecurity products.

Internet Era - The Internet then opened a Pandora’s Box of threats to the new digital world, bringing with it computer worms, spam, phishing attacks, macro viruses, adware, spyware, password-stealing Trojans just to name a few. Many of us still remember the sleepless nights from the Virus War of 2004. Anti-virus could no longer keep up spawning new cybersecurity solutions like Firewalls, VPNs, Host Intrusion Prevention, Network Intrusion Prevention, Application Control, etc.

Cloud, BYOD, & IOT Era - Prior to 2006, the assets and data that most security teams needed to protect were primarily confined within the corporate firewall and VPN. Cloud computing, BYOD, and IOT changed all of that and decentralized corporate data, intellectual property, and network traffic. IT and Security Operations teams had to adjust to protecting company assets, employees, and data scattered all over the real and virtual world.

Artificial Intelligence Era - We are now at the doorstep of a new significant era thanks to artificial intelligence. Although the concept of AI has been a storytelling device in science fiction and academic research since the early 1900s, its real-world application wasn’t possible until the turn of the millennia. After OpenAI launched ChatGPT and it said hello to the world on November 30, 2022, AI became a dinner conversation in practically every household across the globe.

The world is still debating what impact AI will have on economic and social issues, but there is one thing that is not debatable - AI will bring with it a new era of cybersecurity threats and attacks. Let’s delve into how AI/ML works and how security teams will need to think about things differently to protect it.

How does Machine Learning Work?

Artificial Intelligence (AI) and Machine Learning (ML) are sometimes used interchangeably, which can cause confusion to those who aren’t data scientists. Before you can understand ML, you first need to understand the field of AI.

AI is a broad field that encompasses the development of intelligent machines capable of simulating human intelligence and performing tasks that typically require human intelligence, such as problem-solving, decision-making, perception, and natural language understanding.

ML is a subset of AI that focuses on algorithms and statistical models that enable computer systems to automatically learn and improve from experience without being explicitly programmed. ML algorithms aim to identify patterns and make predictions or decisions based on data. The majority of AI we deal with today are ML models.

Now that we’ve defined AI & ML, let’s dive into how a Machine Learning Model is created.

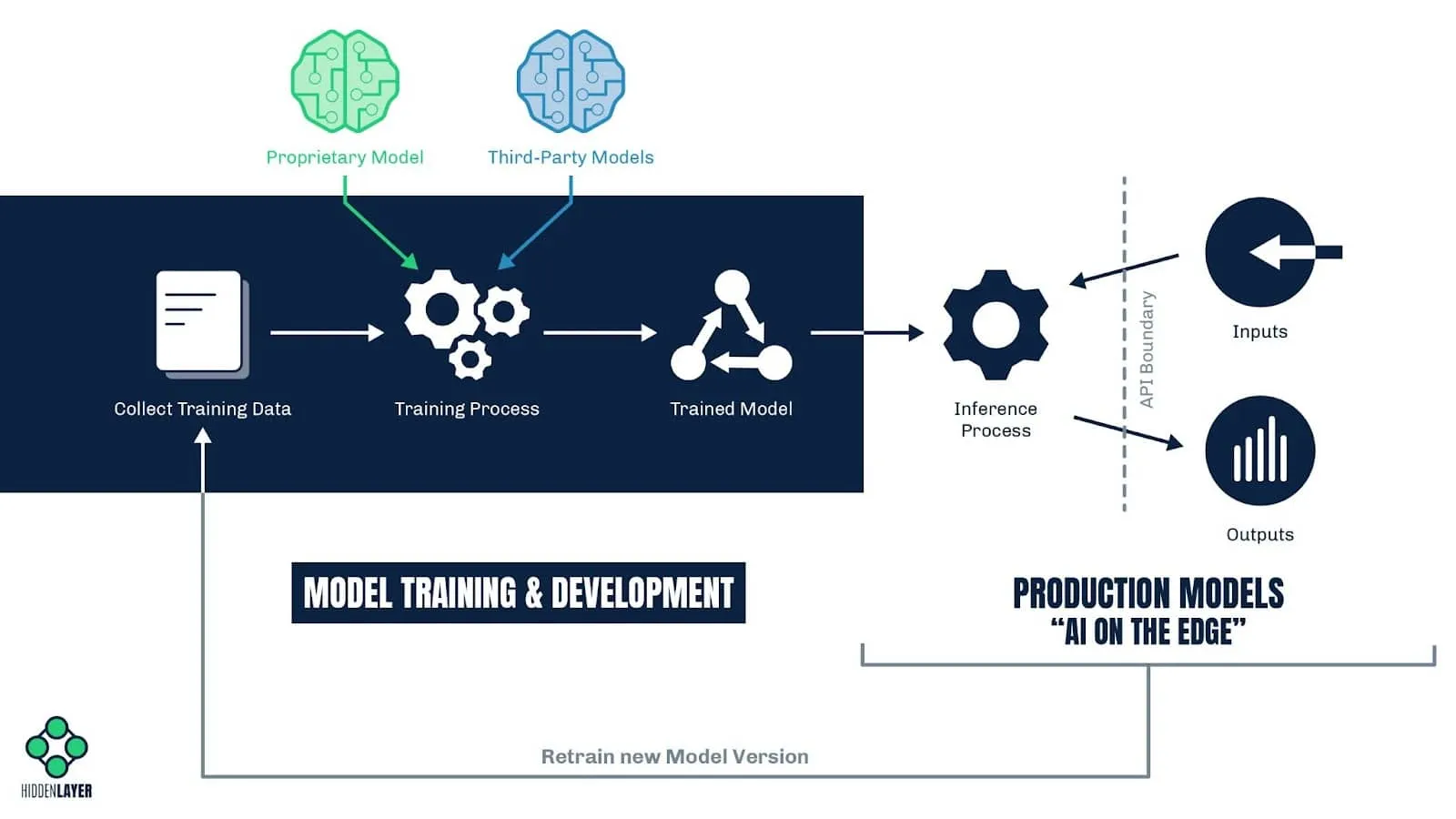

MLOps Lifecycle

Machine Learning Operations (MLOps) are the set of processes and principles for managing the lifecycle of the development and deployment of Machine Learning Models. It is now important for cybersecurity professionals to understand MLOps just as deeply as IT Operations, DevOps, and HR Operations.

Model Training & Development

Collect Training Data - With an objective in mind, data science teams begin by collecting large swaths of data used to train their machine learning model(s) to achieve their goals. The quality and quantity of the data will directly influence the intent and accuracy of the ML Model. External and internal threat actors could introduce poisoned data to undermine the integrity and efficacy of the ML model.

Training Process - When an adequate corpus of training data has been compiled, data scientist teams will start the training process to develop their ML Models. They typically source their ML models in two ways:

- Proprietary Models: Large enterprise corporations or startup companies with unique value propositions may decide to develop their own ML models from scratch and encase their intellectual property within proprietary models. ML vulnerabilities could allow inference attacks and model theft, jeopardizing the company’s intellectual property.

- Third-Party Models: Companies trying to bootstrap their adoption of AI may start by utilizing third-party models from open-source repositories or ML Model marketplaces like HuggingFace, Kaggle, Microsoft Azure ML Marketplace, and others. Unfortunately, security controls on these forums are limited. Malicious and vulnerable ML Models have been found in these repositories, making the ML Model an attack vector into a corporate environment.

Trained Model - Once the Data Science team has trained their ML model to meet their success criteria and initial objectives, they make the final decision to prepare the ML Model for release into production. This is the last opportunity to identify and eliminate any vulnerabilities, malicious code, or integrity issues to minimize risks the ML Model may pose once in production and accessible by customers or the general public.

ML Security (MLSec)

As you can see, AI introduces a paradigm shift in traditional security approaches. Unlike conventional software systems, AI algorithms learn from data and adapt over time, making them dynamic and less predictable. This inherent complexity amplifies the potential risks and vulnerabilities that can be exploited by malicious actors.

Luckily, as cybersecurity professionals, we’ve been down this route before and are more equipped than ever to adapt to new technologies, changes, and influences on the threat landscape. We can be proactive and apply industry best practices and techniques to protect our company’s machine learning models. Now that you have an understanding of ML Operations (similar to DevOps), let’s explore other areas where ML Security is similar and different compared to traditional cybersecurity.

- Asset Inventory - As the saying goes in cybersecurity, “you can’t protect what you don’t know.” The first step in a successful cybersecurity operation is having a comprehensive asset inventory. Think of ML Models as corporate assets to be protected.

ML Models can appear among a company’s IT assets in 3 primary ways:

- Proprietary Models - the company’s data science team creates its own ML Models from scratch

- Third-Party Models - the company’s R&D organization may derive ML Models from 3rd party vendors or open source or simply call them using an API

- Embedded Models - any of the company’s business units could be using 3rd party software or hardware that have ML Models embedded in their tech stack, making them susceptible to supply chain attacks. There is an ongoing debate within the industry on how to best provide discovery and transparency of embedded ML Models. The adoption of Software Bill of Materials (SBOM) to include AI components is one way. There are also discussions of an AI Bill of Materials (AI BOM).

- File Types - In traditional cybersecurity, we think of files such as portable executables (PE), scripts, macros, OLE, and PDFs as possible attack vectors for malicious code execution. ML Models are also files, but you will need to learn the file formats associated with ML such as pickle, PyTorch, joblib, etc.

- Container Files - We’re all too familiar with container files like zip, rar, tar, etc. ML Container files can come in the form of Zip, ONNX, and HDF5.

- Malware - Since ML Models can present themselves in a variety of forms with data storage and code execution capabilities, they can be weaponized to host malware and be an entry point for an attack into a corporate network. It is imperative that every ML Model coming in and out of your company be scanned for malicious code.

- Vulnerabilities - Due to the various libraries and code embedded in ML Models, they can have inherent vulnerabilities such as backdoors, remote code execution, etc. We predict the volume of reported vulnerabilities in ML will increase at a rapid pace as AI becomes more ubiquitous. ML Models should be scanned for vulnerabilities before releasing into production.

- Risky downloads - Traditional file transfer and download repositories such as Bulletin Board Systems (BBS), Peer 2 Peer (P2P) networks, and torrents were notorious for hosting malware, adware, and unwanted programs. Third-party ML Model repositories are still in their infancy but gaining tremendous traction. Many of their terms and conditions release them of liability with a “Use at your own risk” stance.

- Secure Coding - ML Models are code and are just as susceptible to supply chain attacks as traditional software code. A recent example is the PyPi Package Hijacking carried out through a phishing attack. Therefore traditional secure coding best practices and audits should be put in place for ML Models within the MLOps Lifecycle.

- AI Red Teaming - Similar to Penetration Testing and traditional adversarial red teaming, the purpose of AI Red Teaming is to assess a company’s security posture around its AI assets and ML operations.

- Real-Time Monitoring - Visibility and monitoring for suspicious behavior on traditional assets are crucial in Security Operations. ML Models should be treated in the same manner with early warning systems in place to respond to possible attacks on the models. Unlike traditional computing devices, ML Models can initially seem like a black box with seemingly indecipherable activity. HiddenLayer MLSec Platform provides the visibility and real-time insights to secure your company’s AI.

- ML Attack Techniques - Cybersecurity attack techniques on traditional IT assets grew and evolved through the decades with each new technological era. AI & ML usher in a whole new category of attack techniques called Adversarial Machine Learning (AdvML). Our SAI Research Team goes into depth on this new frontier of Adversarial ML.