AI Guardrails for Safe and Compliant AI

Enforce safety, compliance, and policy boundaries across LLMs, agents, and AI applications.

AI outputs must stay safe, consistent, and policy-aligned

Enterprises need predictable and compliant AI behavior. Without guardrails, LLMs and agents can generate harmful content, leak sensitive data, violate policy, or behave inconsistently across applications.

AI systems need standardized behavioral controls

Teams struggle to enforce consistent rules for tone, safety, compliance, and restricted topics across departments and applications.

LLM outputs can unintentionally reveal sensitive data

Without safety filters and real-time checks, generative systems may expose PII, PHI, or proprietary information in their outputs.

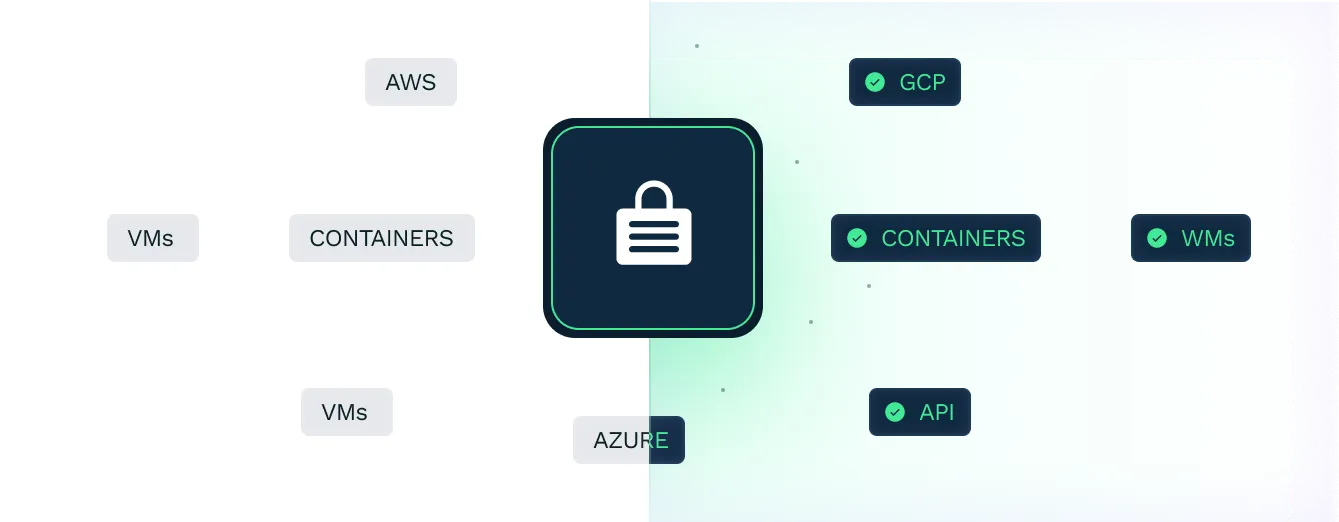

Guardrails that enforce safe use of AI at enterprise scale

Output Safety and Content Controls

Enforce boundaries around content, toxicity, bias, and regulatory restrictions.

Policy-Driven Response Shaping

Ensure responses comply with security policies, including protection against prompt injection, data leakage, and sensitive information exposure.

Sensitive Data Protection

Detect and prevent PII, PHI, and proprietary data exposure in generated content.

MCP and Framework Traffic Inspection

Combine safety guardrails with real-time threat detection and prompt injection blocking.

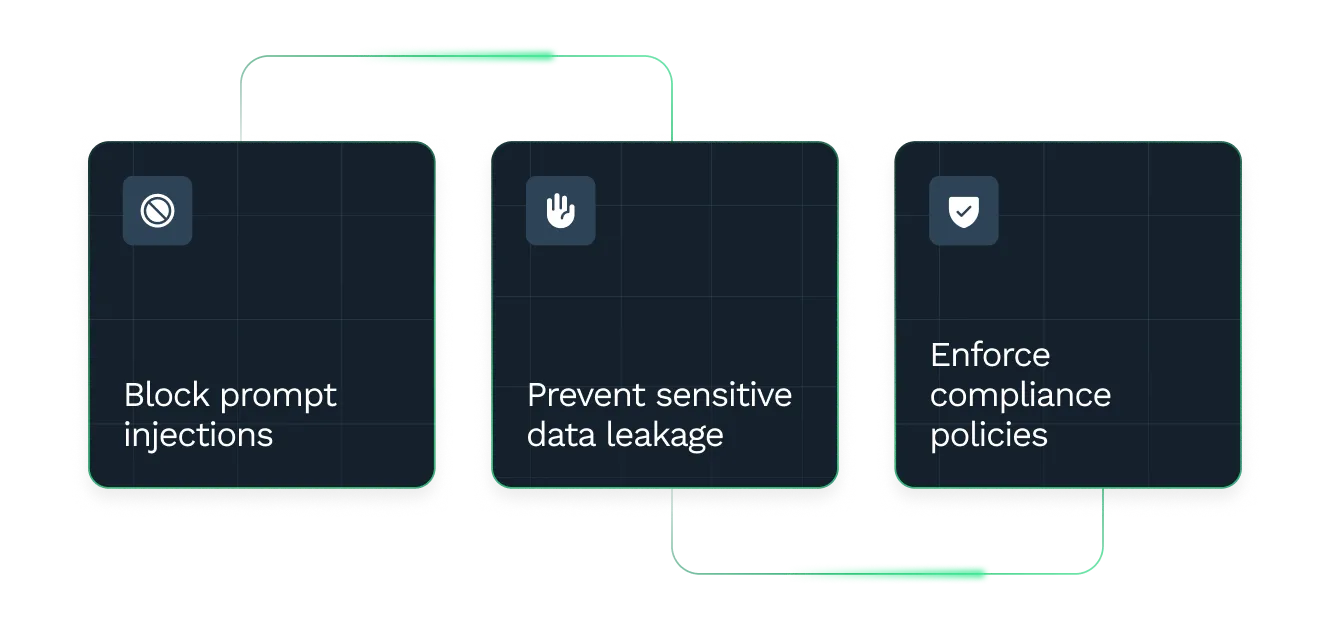

Safe, predictable, and policy-aligned AI

Enterprise control for both agentic and generative AI systems.

Standardize safe use of AI

Apply consistent policies across business units.

Prevent sensitive data leakage

Detect and redact PII and protected information.

Ensure compliant responses

Enforce legal, regulatory, and brand requirements.

Strengthen defenses

Combine safety guardrails with prompt attack detection.

Maintain trust and quality

Deliver reliable outputs that users can depend on, with support for leading frameworks including OpenAI, AWS Bedrock, and MCP-based systems.

Learn from the Industry’s AI Security Experts

Research, guidance, and frameworks from the team shaping AI security standards.

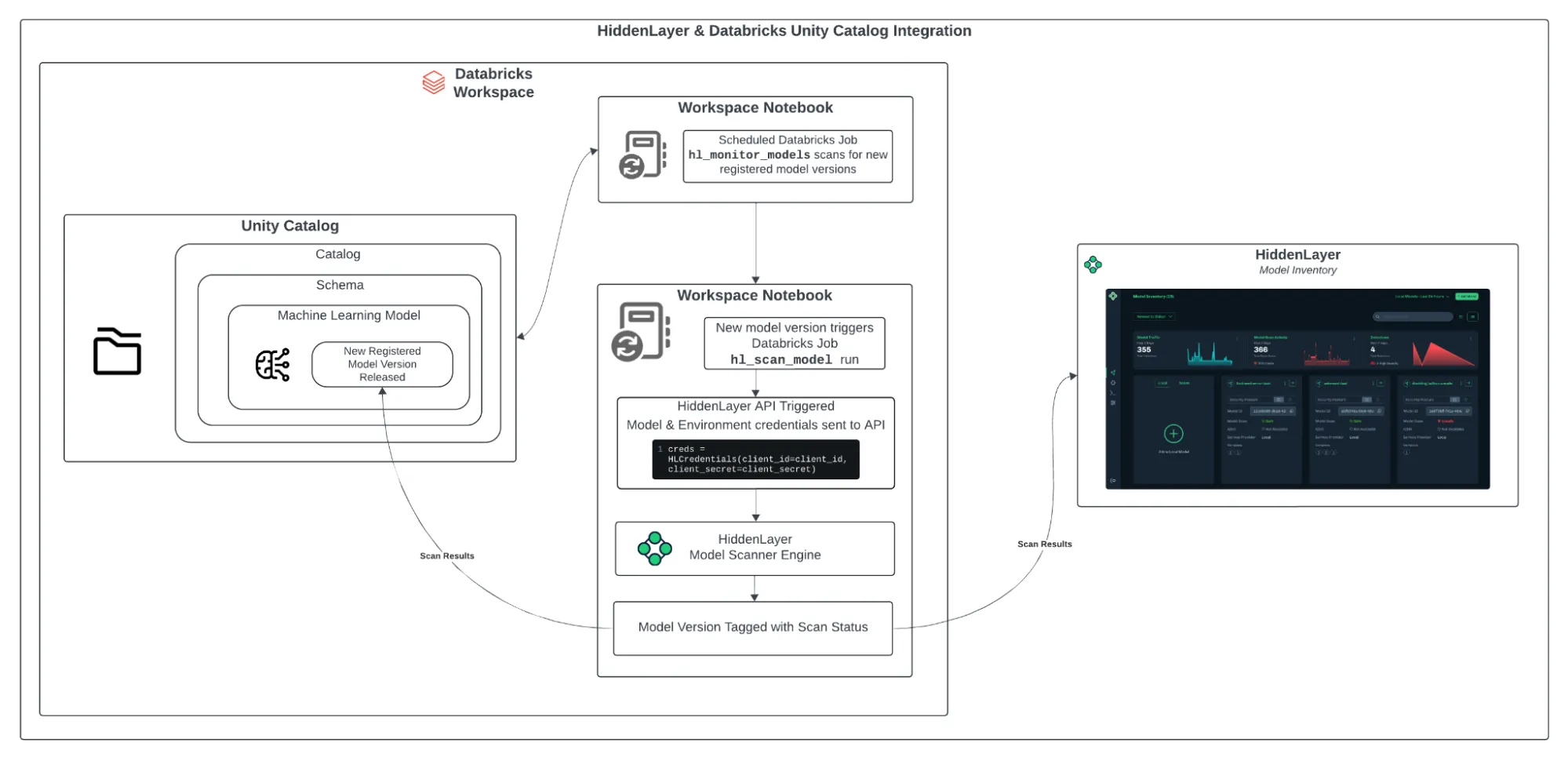

Integrating HiddenLayer’s Model Scanner with Databricks Unity Catalog

As machine learning becomes more embedded in enterprise workflows, model security is no longer optional. From training to deployment, organizations need a streamlined way to detect and respond to threats that might lurk inside their models. The integration between HiddenLayer’s Model Scanner and Databricks Unity Catalog provides an automated, frictionless way to monitor models for vulnerabilities as soon as they are registered. This approach ensures continuous protection without slowing down your teams.

Enforce AI behavior

Implement enterprise-grade guardrails for safe and compliant AI.
