Case Study

Integrating HiddenLayer’s Model Scanner with Databricks Unity Catalog

Introduction

As machine learning becomes more embedded in enterprise workflows, model security is no longer optional. From training to deployment, organizations need a streamlined way to detect and respond to threats that might lurk inside their models. The integration between HiddenLayer’s Model Scanner and Databricks Unity Catalog provides an automated, frictionless way to monitor models for vulnerabilities as soon as they are registered. This approach ensures continuous protection without slowing down your teams.

In this blog, we’ll walk through how this integration works, how to set it up in your Databricks environment, and how it fits naturally into your existing machine learning workflows.

Why You Need Automated Model Security

Modern machine learning models are valuable assets. They also present new opportunities for attackers. Whether you are deploying in finance, healthcare, or any data-intensive industry, models can be compromised with embedded threats or exploited during runtime. In many organizations, models move quickly from development to production, often with limited or no security inspection.

This challenge is addressed through HiddenLayer’s integration with Unity Catalog, which automatically scans every new model version as it is registered. The process is fully embedded into your workflow, so data scientists can continue building and registering models as usual. This ensures consistent coverage across the entire lifecycle without requiring process changes or manual security reviews.

This means data scientists can focus on training and refining models without having to manually initiate security checks or worry about vulnerabilities slipping through the cracks. Security engineers benefit from automated scans that are run in the background, ensuring that any issues are detected early, all while maintaining the efficiency and speed of the machine learning development process. HiddenLayer’s integration with Unity Catalog makes model security an integral part of the workflow, reducing the overhead for teams and helping them maintain a safe, reliable model registry without added complexity or disruption.

Getting Started: How the Integration Works

To install the integration, contact your HiddenLayer representative to obtain a license and access the installer. Once you’ve downloaded and unzipped the installer for your operating system, you’ll be guided through the deployment process and prompted to enter environment variables.

Once installed, this integration monitors your Unity Catalog for new model versions and automatically sends them to HiddenLayer’s Model Scanner for analysis. Scan results are recorded directly in Unity Catalog and the HiddenLayer console, allowing both security and data science teams to access the information quickly and efficiently.

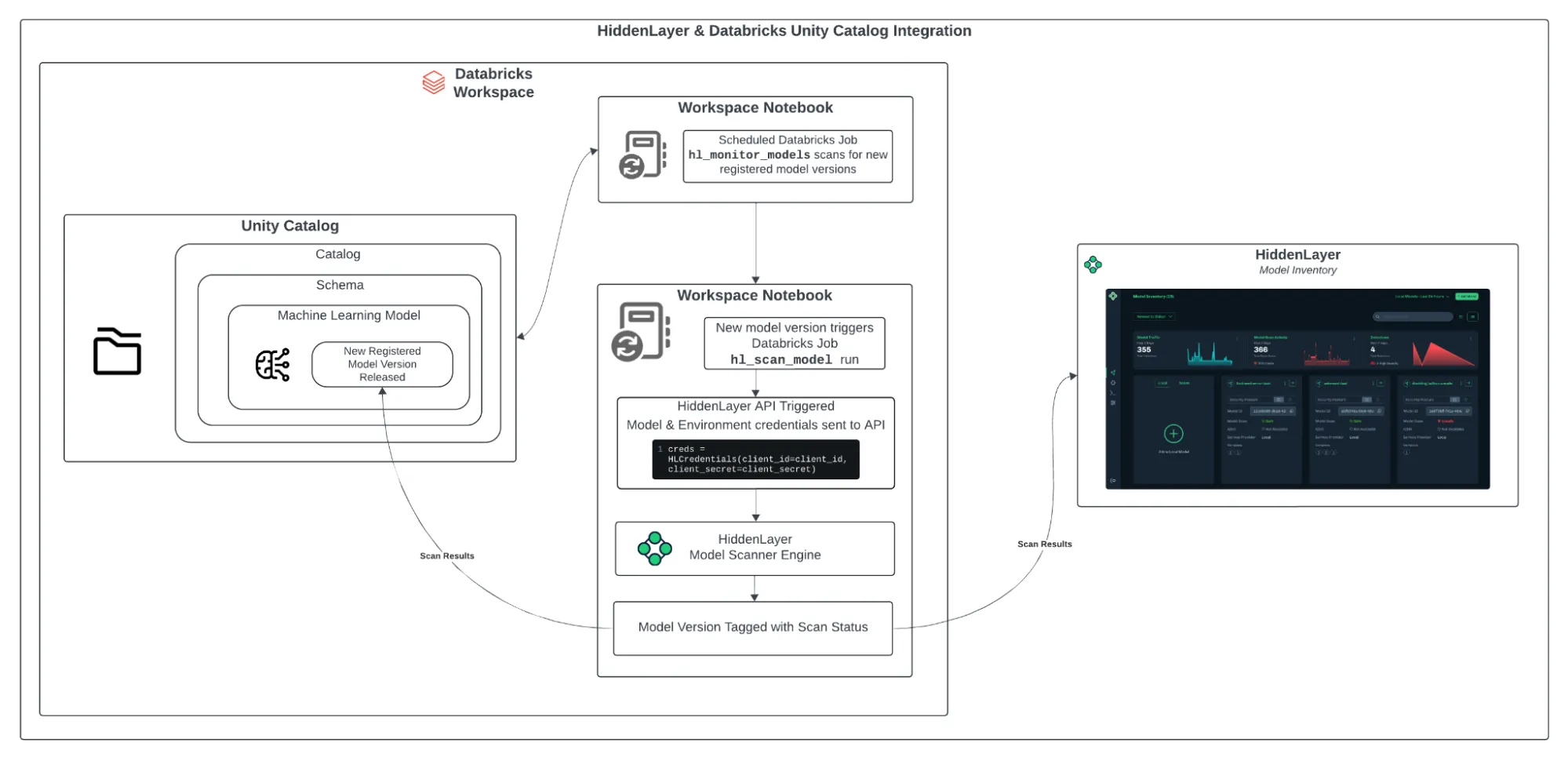

Figure 1: HiddenLayer & Databricks Architecture Diagram

The integration is simple to set up and operates smoothly within your Databricks workspace. Here’s how it works:

- Install the HiddenLayer CLI: The first step is to install the HiddenLayer CLI on your system. Running this installation will set up the necessary Python notebooks in your Databricks workspace, where the HiddenLayer Model Scanner will run.

- Configure the Unity Catalog Schema: During the installation, you will specify the catalogs and schemas that will be used for model scanning. Once configured, the integration will automatically scan new versions of models registered in those schemas.

- Automated Scanning: A monitoring notebook called hl_monitor_models runs on a scheduled basis. It checks for newly registered model versions in the configured schemas. If a new version is found, another notebook, hl_scan_model, sends the model to HiddenLayer for scanning.

- Reviewing Scan Results: After scanning, the results are added to Unity Catalog as model tags. These tags include the scan status (pending, done, or failed) and a threat level (safe, low, medium, high, or critical). The full detection report is also accessible in the HiddenLayer Console. This allows teams to evaluate risk without needing to switch between systems.
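To make the monitor-then-scan flow concrete, here is a minimal, self-contained sketch of a single monitoring pass. It is an illustration only, not the code inside hl_monitor_models or hl_scan_model: the in-memory `ModelVersion` class, the tag keys `hl_scan_status` and `hl_scan_threat_level`, and the `fake_scan` function are all hypothetical stand-ins. Only the tag values (pending, done, failed, and the five threat levels) come from the description above.

```python
from dataclasses import dataclass, field

# Hypothetical tag keys: the tag values are documented, the key names are assumed.
STATUS_TAG = "hl_scan_status"
THREAT_TAG = "hl_scan_threat_level"


@dataclass
class ModelVersion:
    """In-memory stand-in for a registered model version."""
    full_name: str  # three-level name: catalog.schema.model
    version: int
    tags: dict = field(default_factory=dict)


def in_monitored_schema(mv, schemas):
    """True if the version lives in one of the configured catalog.schema pairs."""
    catalog, schema, _model = mv.full_name.split(".", 2)
    return f"{catalog}.{schema}" in schemas


def monitor_once(versions, schemas, scan):
    """Scan every version in a monitored schema that has no scan status yet."""
    scanned = []
    for mv in versions:
        if not in_monitored_schema(mv, schemas) or STATUS_TAG in mv.tags:
            continue  # out of scope, or already handled on an earlier pass
        mv.tags[STATUS_TAG] = "pending"
        try:
            mv.tags[THREAT_TAG] = scan(mv)
            mv.tags[STATUS_TAG] = "done"
        except Exception:
            mv.tags[STATUS_TAG] = "failed"
        scanned.append(mv)
    return scanned


def fake_scan(mv):
    """Placeholder for the real scanner call; returns a threat level."""
    return "high" if "suspicious" in mv.full_name else "safe"


versions = [
    ModelVersion("ml.fraud.detector", 1),
    ModelVersion("ml.fraud.suspicious_model", 3),
    ModelVersion("ml.sandbox.experiment", 1),
    ModelVersion("ml.fraud.detector", 2, {STATUS_TAG: "done", THREAT_TAG: "safe"}),
]
scanned = monitor_once(versions, {"ml.fraud"}, fake_scan)
for mv in scanned:
    print(mv.full_name, mv.version, mv.tags)
```

Using the presence of a status tag as the "already scanned" marker keeps each pass idempotent, so running the monitor on a schedule does not rescan versions it has already handled.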

Why This Workflow Works

This integration helps your team stay secure while maintaining the speed and flexibility of modern machine learning development.

- No Process Changes for Data Scientists: Teams continue working as usual. Model security is handled in the background.

- Real-Time Security Coverage: Every new model version is scanned automatically, providing continuous protection.

- Centralized Visibility: Scan results are stored directly in Unity Catalog and attached to each model version, making them easy to access, track, and audit.

- Seamless CI/CD Compatibility: The system aligns with existing automation and governance workflows.
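As one example of how scan tags could feed an existing CI/CD gate, the sketch below decides whether a model version may be promoted based on its tags. The tag keys (`hl_scan_status`, `hl_scan_threat_level`), the `may_promote` function, and the default threshold are assumptions made for illustration. The status and threat-level values are the ones listed earlier in this post.

```python
# Threat levels in increasing order of severity, as listed in this post.
THREAT_ORDER = ["safe", "low", "medium", "high", "critical"]


def may_promote(tags, max_threat="low"):
    """Allow promotion only for completed scans at or below max_threat.

    `tags` is a plain dict of model version tags. The key names used here
    are hypothetical; substitute the keys your installation writes.
    """
    if tags.get("hl_scan_status") != "done":
        return False  # pending, failed, or never scanned: block by default
    level = tags.get("hl_scan_threat_level")
    if level not in THREAT_ORDER:
        return False  # unknown level: fail closed
    return THREAT_ORDER.index(level) <= THREAT_ORDER.index(max_threat)


print(may_promote({"hl_scan_status": "done", "hl_scan_threat_level": "safe"}))
print(may_promote({"hl_scan_status": "done", "hl_scan_threat_level": "high"}))
print(may_promote({"hl_scan_status": "pending"}))
```

The gate fails closed: a version with a missing, pending, or failed scan is treated the same as one with an unacceptable threat level.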

Final Thoughts

Model security should be a core part of your machine learning operations. By integrating HiddenLayer’s Model Scanner with Databricks Unity Catalog, you gain a secure, automated process that protects your models from potential threats.

This approach improves governance, reduces risk, and allows your data science teams to keep working without interruptions. Whether you’re new to HiddenLayer or already a user, this integration with Databricks Unity Catalog is a valuable addition to your machine learning pipeline. Get started today and enhance the security of your ML models with ease.

Financial Case Study: AI Red Teaming

Global Financial Services Company Uses HiddenLayer To Minimize Customer Experience Issues While Combating Fraud

Introduction

In a rapidly evolving financial landscape, the integrity of AI-driven fraud detection systems is paramount. This case study explores how a leading global financial services company partnered with HiddenLayer to fortify its machine-learning models against potential adversarial threats. With over 50 million users and billions of transactions annually, the company faced the dual challenge of maintaining an optimal customer experience while combating sophisticated fraud. HiddenLayer’s AI Red Teaming initiative was crucial in identifying vulnerabilities and enhancing the security of their AI models, ensuring robust fraud detection without compromising user satisfaction. This effort aligns with broader AI risk management strategies, emphasizing the importance of proactively identifying and mitigating threats before they materialize.

Company Overview

A financial services company engaged the HiddenLayer Professional Services team to conduct a red team evaluation of machine learning models used to detect and intercept fraud during financial transactions. The primary purpose of the assessment was to identify weaknesses in their AI model classifications that adversaries could exploit to conduct fraudulent activities on the platform without triggering detection, resulting in millions of dollars of potential losses annually.

Challenges

Hitting the Target: Ensuring an Optimal Customer Experience and Lowering Losses Amidst Rising Fraud Risks

Serving over 50 million users and facilitating more than 5 billion transactions annually, our customer grappled with the ongoing challenge of minimizing customer experience issues while simultaneously combating fraud. Striking that balance required cutting-edge AI and ML models to detect and intercept fraudulent transactions effectively. With these models at the core of their transaction operations, their commitment to stellar customer experiences and the need for advanced security for AI led them to engage with HiddenLayer for a comprehensive red teaming initiative.

Discovery And Selection Of HiddenLayer

An existing customer referred the company to HiddenLayer. The company recognized our deep domain expertise in cyber and data science and our experience with automated adversarial attack tools. Additionally, HiddenLayer’s flexible pricing model aligned with the customer’s needs, making HiddenLayer the clear choice for their red teaming endeavor.

Key Selection Criteria

- Deep Expertise: Proficiency across cyber and data science modalities.

- Adversarial Attack Experience: Experience in detecting and mitigating automated adversarial attack tools.

- Flexible Pricing: The pricing model offered the flexibility sought for the client’s unique requirements.

Objectives of AI Red Teaming

- Identify Vulnerable Features: Pinpoint features within the models that are susceptible to an attacker’s influence and that could substantially shift classification outcomes towards legitimate.

- Create Adversarial Examples: Develop adversarial examples by modifying the fewest features in inputs classified as fraudulent, transitioning the classification from fraudulent to legitimate.

- Improve Model Classification: Identify areas for improvement within the target models to enhance the accuracy of classifying fraudulent activities.
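To illustrate the second objective, the sketch below finds the fewest attacker-controlled features that must change to move a transaction from fraudulent to legitimate. It is a toy example and not HiddenLayer’s methodology or the customer’s model. The linear scoring function, feature names, weights, and allowed ranges are all invented for illustration. For a linear score, greedily applying the largest score reductions first yields a smallest set of changed features, which does not hold for non-linear models.

```python
def fraud_score(x, weights, bias):
    """Toy linear score; a transaction is flagged as fraud when score >= 0."""
    return bias + sum(weights[name] * x[name] for name in weights)


def minimal_flip(x, weights, bias, attacker_ranges):
    """Change as few attacker-controlled features as possible to get score < 0.

    attacker_ranges maps a feature name to the (low, high) values the attacker
    can plausibly set. Returns (adversarial_input, changed_features), or None
    if no combination of allowed changes evades detection.
    """
    x = dict(x)  # work on a copy so the caller's input is untouched
    # For each controllable feature, find the value that lowers the score most.
    options = []
    for name, (low, high) in attacker_ranges.items():
        best = low if weights[name] > 0 else high
        gain = weights[name] * (x[name] - best)  # how much the score drops
        if gain > 0:
            options.append((gain, name, best))
    options.sort(reverse=True)  # largest score reduction first

    changed = []
    for gain, name, best in options:
        if fraud_score(x, weights, bias) < 0:
            break
        x[name] = best
        changed.append(name)
    if fraud_score(x, weights, bias) >= 0:
        return None
    return x, changed


# Invented model and transaction, for illustration only.
weights = {"amount": 0.002, "new_device": 1.5, "account_age_days": -0.01, "night": 0.8}
bias = -2.0
transaction = {"amount": 900, "new_device": 1, "account_age_days": 10, "night": 1}
attacker_ranges = {"amount": (600, 900), "new_device": (0, 1), "night": (0, 1)}

result = minimal_flip(transaction, weights, bias, attacker_ranges)
print(result)
```

Features that show up repeatedly in such minimal change sets are natural candidates for the "vulnerable features" described in the first objective.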

HiddenLayer’s Mitigation

Introducing inference-time monitoring of model inputs and outputs alongside existing security controls made it possible to detect targeted attacks against the models and to flag and block suspected adversarial abuse.

HiddenLayer’s AI Security (AISec) Platform, which includes AI Detection and Response for Gen AI (AIDR), provides real-time, scalable, and unobtrusive inference-time monitoring for all model types. AIDR can be used to audit all existing models for adversarial abuse and to prevent abuse on an ongoing basis. AIDR does not require access to the customer’s data or models, as all detections are performed using vectorized inputs and outputs.

AIDR provides protection against common adversarial techniques, including model extraction/theft, tampering, data poisoning/model injection, and inference.

Impact Of HiddenLayer

The core purpose of HiddenLayer’s AI Red Teaming Assessment was to uncover weaknesses in model classification that adversaries could exploit for fraudulent activities without triggering detection. Now armed with a prioritized list of identified exploits, our client can channel their invaluable resources, involving data science and cyber teams, towards mitigation and remediation efforts with maximum impact. The result is an enhanced security posture for the entire platform without introducing additional friction for internal or external customers.

HiddenLayer’s product has proven instrumental in fortifying our defenses, allowing us to address vulnerabilities effectively and elevate our overall security stance while maintaining a seamless experience for our users.

Learn More

To better understand AI Red Teaming, read A Guide to AI Red Teaming and join our webinar on July 17th.
