Advancing the Science of AI Security

The HiddenLayer AI Security Research team uncovers vulnerabilities, develops defenses, and shapes global standards to ensure AI remains secure, trustworthy, and resilient.

Turning Discovery Into Defense

Our mission is to identify and neutralize emerging AI threats before they impact the world. The HiddenLayer AI Security Research team investigates adversarial techniques, supply chain compromises, and agentic AI risks, transforming findings into actionable security advancements that power the HiddenLayer AI Security Platform and inform global policy.

Our AI Security Research Team

HiddenLayer’s research team combines offensive security experience, academic rigor, and a deep understanding of machine learning systems.

Kenneth Yeung

Senior AI Security Researcher

Conor McCauley

Adversarial Machine Learning Researcher

Jim Simpson

Principal Intel Analyst

Jason Martin

Director, Adversarial Research

Andrew Davis

Chief Data Scientist

Marta Janus

Principal Security Researcher

Eoin Wickens

Director of Threat Intelligence

Kieran Evans

Principal Security Researcher

Ryan Tracey

Principal Security Researcher

Kasimir Schulz

Director, Security Research

Our Impact by the Numbers

Quantifying the reach and influence of HiddenLayer’s AI Security Research.

Reduction in exposure to AI exploits

Disclosed through our security research

Issued patents

Latest Discoveries

Explore HiddenLayer’s latest vulnerability disclosures, advisories, and technical insights advancing the science of AI security.

Synaptic Adversarial Intelligence Introduction

It is my great pleasure to announce the formation of HiddenLayer’s Synaptic Adversarial Intelligence team, SAI.

First and foremost, our team of multidisciplinary cyber security experts and data scientists are on a mission to increase general awareness surrounding the threats facing machine learning and artificial intelligence systems. Through education, we aim to help data scientists, MLDevOps teams and cyber security practitioners better evaluate the vulnerabilities and risks associated with ML/AI, ultimately leading to more security conscious implementations and deployments.

Alongside our commitment to increase awareness of ML security, we will also actively assist in the development of countermeasures to thwart ML adversaries through the monitoring of deployed models, as well as providing mechanisms to allow defenders to respond to attacks.

Our team of experts have many decades of experience in cyber security, with backgrounds in malware detection, threat intelligence, reverse engineering, incident response, digital forensics and adversarial machine learning. Leveraging our diverse skill sets, we will also be developing open-source attack simulation tooling, talking about attacks in blogs and at conferences and offering our expert advice to anyone who will listen!

It is a very exciting time for machine learning security, or MLSecOps, as it has come to be known. Despite the relative infancy of this emerging branch of cyber security, there has been tremendous effort from several organizations, such as MITRE and NIST, to better understand and quantify the risks associated with ML/AI today. We very much look forward to working alongside these organizations, and other established industry leaders, to help broaden the pool of knowledge, define threat models, drive policy and regulation, and most critically, prevent attacks.

Keep an eye on our blog in the coming weeks and months, as we share our thoughts and insights into the wonderful world of adversarial machine learning, and provide insights to empower attackers and defenders alike.

Happy learning!

–

Tom Bonner

Sr. Director of Adversarial Machine Learning Research, HiddenLayer Inc.

Sleeping With One AI Open

AI - Trending Now

Artificial Intelligence (AI) is the hot topic of the 2020s - just as “email” used to be in the 80s, “World Wide Web” in the 90s, “cloud computing” in the 00s, and “Internet-of-Things” more recently. However, it’s much more than just a buzzword, and like each of its predecessors, the technology behind it is rapidly transforming our world and everyday life.

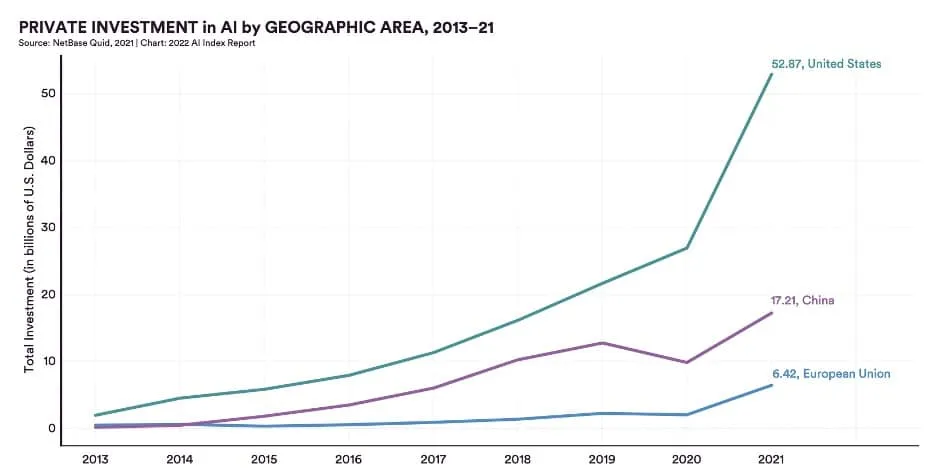

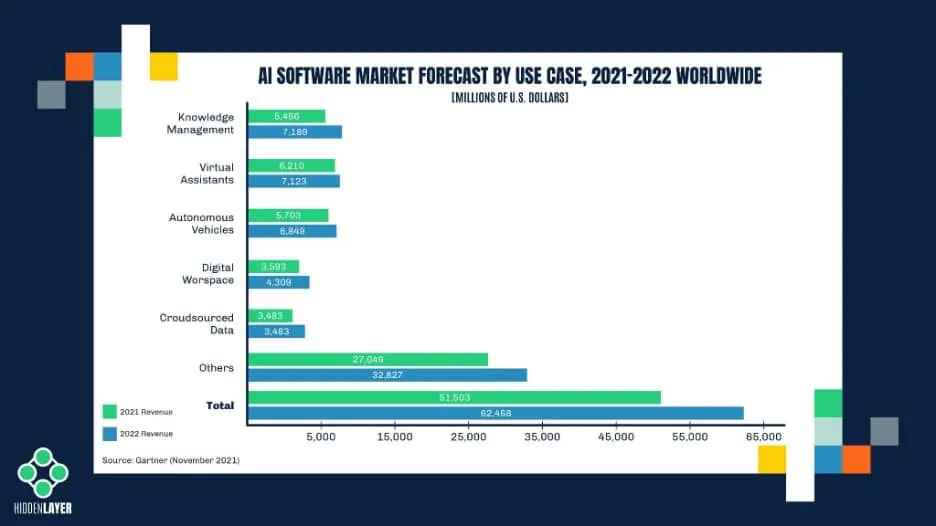

The underlying technology, called Machine Learning (ML), is all around us - in the apps we use on our personal devices, in our homes, cars, banks, factories, and hospitals. ML attracts billions of dollars of investments each year and generates billions more in revenue. Most people are unaware that many aspects of our lives depend on the decisions made by AI, or more specifically, some unintentionally obscure machine learning models that power those AI solutions. Nowadays, it’s ML that decides whether you get a mortgage or how much you will pay for your health insurance; even unlocking your phone relies on an effective ML model (we’ll explain this term in a bit more detail shortly).

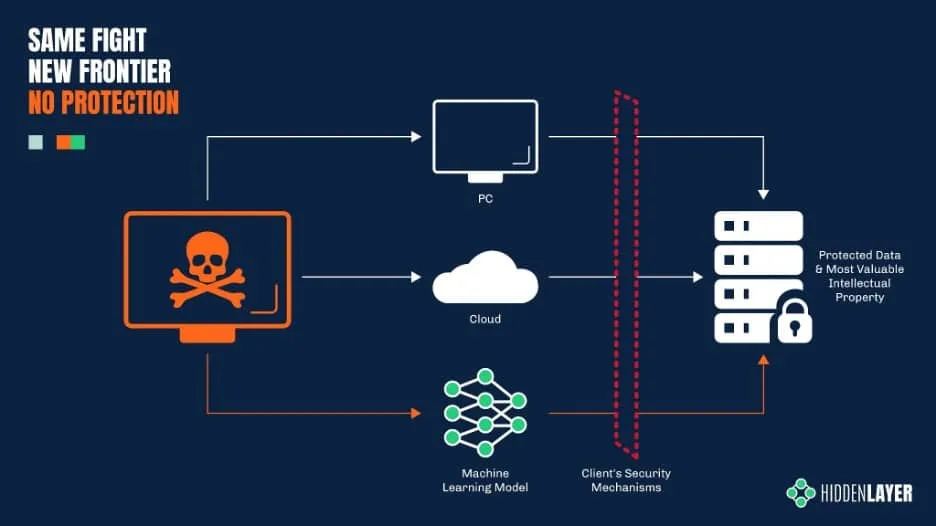

Whether you realize it or not, machine learning is gaining rapid adoption across several sectors, making it a very enticing target for cyber adversaries. We’ve seen this pattern before with various races to implement new technology as security lags behind. The rise of the internet led to the proliferation of malware, email made every employee a potential target for phishing attacks, the cloud dangles customer data out in the open, and your smartphone bundles all your personal information in one device waiting to be compromised. ML is sadly not an exception and is already being abused today.

To understand how cyber-criminals can hack a machine learning model - and why! - we first need to take a very brief look at how these models work.

A Glimpse Under the Hood

Have you ever wondered how Alexa can understand (almost) everything you ask her or how a Tesla car keeps itself from veering off the road? While it may appear like magic, there is a tried and true science under the hood, one that involves a great deal of math.

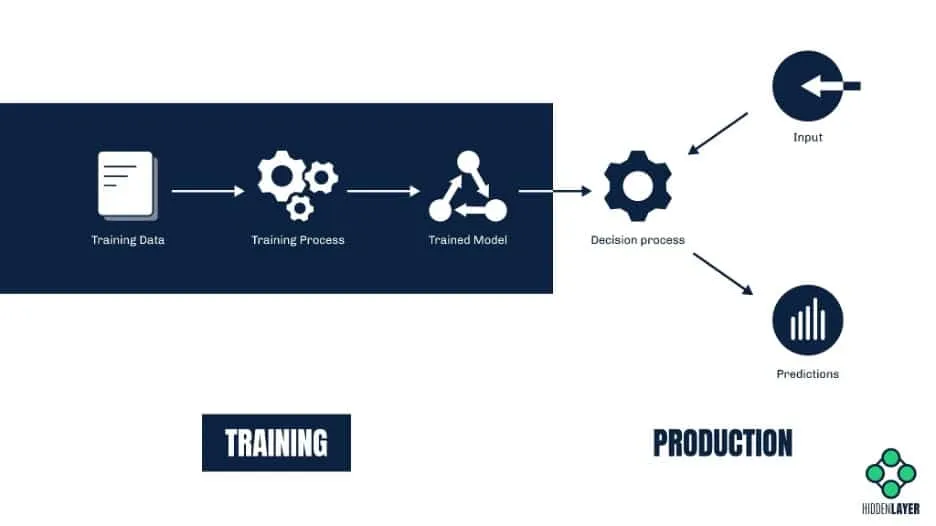

At the core of any AI-powered solution lies a decision-making system, which we call a machine learning model. Despite being a product of mathematical algorithms, this model works much like a human brain - it analyzes the input (such as a picture, a sound file, or a spreadsheet with financial data) and makes a prediction based on the information it has learned in the past.

The phase in which the model “acquires” its knowledge is called the training phase. During training, the model examines a vast amount of data and builds correlations. These correlations enable the model to interpret new, previously unseen input and make some sort of prediction about it.

Let’s take an image recognition system as an example. A model designed to recognize pictures of cats is trained by running a large number of images through a set of mathematical functions. These images will include both depictions of cats (labeled as “cat”) and depictions of other animals (labeled as - you guessed it - “not_cat”). After the training phase computations are completed, the model should be able to correctly classify a previously unseen image as either “cat” or “not_cat” with a high degree of accuracy. The system described is known as a simple binary classifier (as it can make one of two choices), but if we were to extend the system to also detect various breeds of cats and dogs, then it would be called a multiclass classifier.
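To make the training and prediction phases concrete, here is a minimal sketch of a binary classifier. It is not how a real image recognition system works: the two numeric features per sample, the data points, and the nearest-centroid method are all assumptions chosen to keep the example tiny.

```python
# Toy binary classifier: nearest-centroid on made-up 2-D features.
# The feature values are illustrative assumptions, not real image data.
from statistics import mean

def train(samples):
    """samples: list of (features, label). Returns one centroid per label."""
    by_label = {}
    for features, label in samples:
        by_label.setdefault(label, []).append(features)
    return {
        label: tuple(mean(column) for column in zip(*rows))
        for label, rows in by_label.items()
    }

def predict(model, features):
    """Return the label whose centroid is closest to the input."""
    def squared_distance(centroid):
        return sum((a - b) ** 2 for a, b in zip(features, centroid))
    return min(model, key=lambda label: squared_distance(model[label]))

# Training phase: labeled examples of both classes.
training_data = [
    ((0.9, 0.8), "cat"), ((0.8, 0.9), "cat"), ((0.7, 0.7), "cat"),
    ((0.2, 0.1), "not_cat"), ((0.1, 0.3), "not_cat"), ((0.3, 0.2), "not_cat"),
]
model = train(training_data)

# Prediction phase: inputs the model has never seen.
print(predict(model, (0.85, 0.75)))
print(predict(model, (0.15, 0.25)))
```

Adding more labels to the training data (for example, one per breed) would turn the same code into a multiclass classifier, since `predict` simply picks the closest of however many centroids exist.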

Machine learning is not just about classification. There are different types of models that suit various purposes. A price estimation system, for example, will use a model that outputs real-value predictions, while an in-game AI will involve a model that essentially makes decisions. While this is beyond the scope of this article, you can learn more about ML models here.

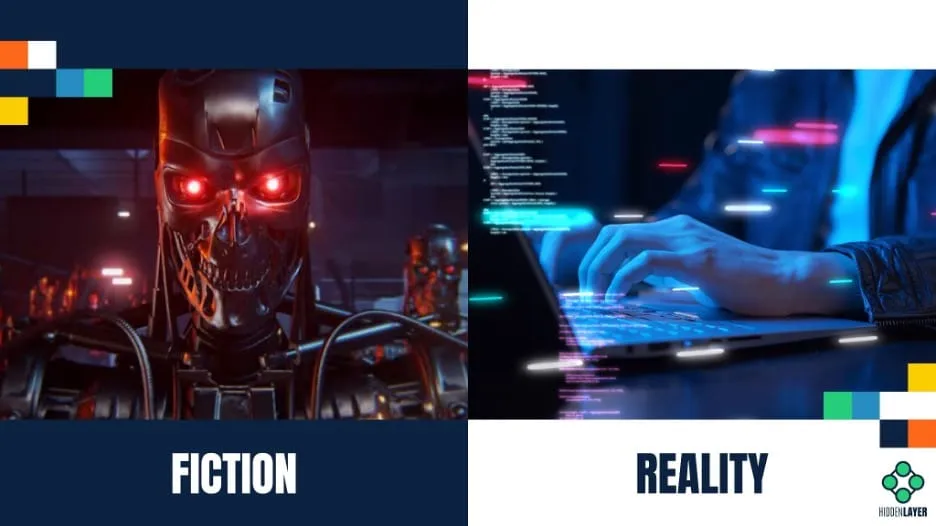

Walking On Thin Ice

When we talk about artificial intelligence in terms of security risks, we usually envisage some super-smart AI posing a threat to society. The topic is very enticing and has inspired countless dystopian stories. However, as things stand, we are not yet close to inventing a truly conscious AI; the recent claims that Google’s LaMDA bot has reached sentience are frankly absurd. Instead of focusing on sci-fi scenarios where AI turns against humans, we should pay much more attention to the genuine risk that we’re facing today - the risk of humans attacking AI.

Many products (such as web applications, mobile apps, or embedded devices) share their entire machine learning model with the end-user. Even if the model itself is deployed in the cloud and is not directly accessible, the consumer still must be able to query it, i.e., upload their inputs and obtain the model’s predictions. This aspect alone makes ML solutions vulnerable to a wide range of abuse.

Numerous academic research studies have proven that machine learning is susceptible to attack. However, awareness of the security risks faced by ML has barely spread outside of academia, and stopping attacks is not yet within the scope of today’s cyber security products. Meanwhile, cyber-criminals are already getting their hands dirty conducting novel attacks to abuse ML for their own gain.

Things invisible to the naked AI

While it may sound like quite a niche, adversarial machine learning (known more colloquially as “model hacking”) is a deceptively broad field covering many different types of attacks on ML systems. Some of them may seem familiar - like distantly related cousins of those traditional cyber attacks that you’re used to hearing about, such as trojans and backdoors.

But why would anyone want to attack an ML model? The reasons are typically the same as any other kind of cyber attack, the most relevant being: financial gain, getting a competitive advantage or hurting competitors, manipulating public opinion, and bypassing security solutions.

In broad terms, an ML model can be attacked in three different ways:

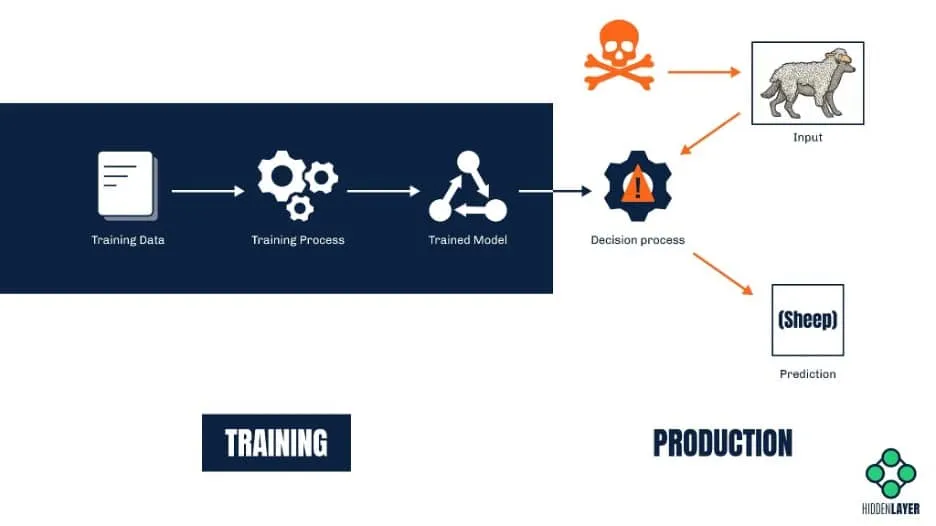

- It can be fooled into making a wrong prediction (e.g., to bypass malware detection)

- It can be altered (e.g., to make it biased, inaccurate, or even malicious in nature)

- It can be replicated (in other words, stolen)

Fooling the model (a.k.a. evasion attacks)

Few may be aware of it, but evasion attacks are already widely employed by cyber-criminals to bypass various security solutions - and have been used for quite a while. Consider ML-based spam filters designed to predict which emails are junk based on the occurrences of specific words in them. Spammers quickly found their way around these filters by adding words associated with legitimate messages to their junk emails. In this way, they were able to fool the model into reaching the wrong conclusion.
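The spam filter case can be sketched in a few lines. The word weights, the threshold, and the scoring rule below are invented for illustration and do not describe any real filter; the point is only that padding a message with "legitimate" words can pull its score under the decision boundary.

```python
# Toy word-based spam filter. All weights and the threshold are
# hypothetical values chosen for this example.
SPAM_WEIGHTS = {"winner": 2.0, "free": 1.5, "prize": 2.0, "click": 1.0}
HAM_WEIGHTS = {"meeting": 1.5, "invoice": 1.0, "regards": 1.5, "schedule": 1.0}
THRESHOLD = 2.0

def spam_score(text):
    """Sum the weights of spammy words and subtract those of legitimate ones."""
    words = text.lower().split()
    score = sum(SPAM_WEIGHTS.get(word, 0.0) for word in words)
    score -= sum(HAM_WEIGHTS.get(word, 0.0) for word in words)
    return score

def is_spam(text):
    return spam_score(text) > THRESHOLD

original = "winner click for your free prize"
# Evasion: same payload, padded with words the filter associates with real mail.
evasive = original + " regards meeting schedule invoice"

print(is_spam(original), spam_score(original))
print(is_spam(evasive), spam_score(evasive))
```

The spam content is unchanged between the two messages; only the input as the model sees it has been altered.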

Of course, most modern machine learning solutions are way more complex and robust than those early spam filters. Nevertheless, with the ability to query a model and read its predictions, attackers can easily craft inputs that will produce an incorrect prediction or classification. The difference between a correctly classified sample and the one that triggers misclassification is often invisible to the human eye.

Besides bypassing anti-spam / anti-malware solutions, evasion attacks can also be used to fool visual recognition systems. For example, a road sign with a specially crafted sticker on it might be misidentified by the ML system on-board a self-driving car. Such an attack could cause a car to fail to identify a stop sign and inadvertently speed up instead of slowing down. In a similar vein, attackers wanting to bypass a facial recognition system might design a special pair of sunglasses that will make the wearer invisible to the system. The possibilities are endless, and some can have potentially lethal consequences.

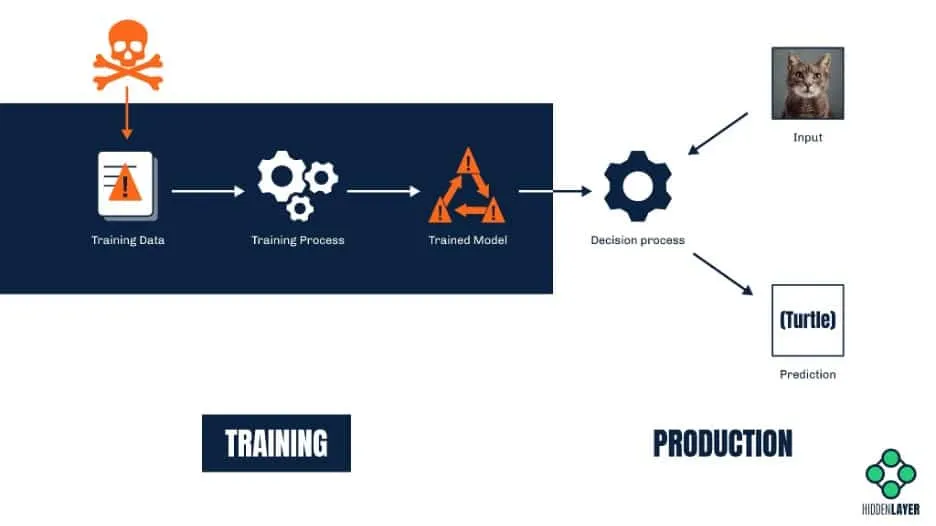

Altering the model (a.k.a. poisoning attacks)

While evasion attacks are about altering the input to make it undetectable (or indeed mistaken for something else), poisoning attacks are about altering the model itself. One way to do so is by training the model on inaccurate information. A great example here would be an online chatbot that is continuously trained on the user-provided portion of the conversation. A malicious user can interact with the bot in a certain way to introduce bias. Remember Tay, the infamous Microsoft Twitter bot whose responses quickly became rude and racist? Although it was a result of (mostly) unintended trolling, it is a prime case study for a crude crowd-sourced poisoning attempt.

ML systems that rely on online learning (such as recommendation systems, text auto-complete tools, and voice recognition solutions, to name but a few) are especially vulnerable to poisoning because the input they are trained on comes from untrusted sources. A model is only as good as its training data (and associated labels), and predictions from a model trained on inaccurate data will always be biased or incorrect.
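As a rough sketch of why online learning from untrusted input is risky, consider a model that keeps a running mean per label and updates it with every sample it receives. The class name, the one-dimensional feature, and the labels are assumptions made for this example.

```python
# Toy online learner: one running mean per label, nearest mean wins.
# OnlineCentroidModel and the sample values are hypothetical.
class OnlineCentroidModel:
    def __init__(self):
        self.sums = {}
        self.counts = {}

    def update(self, value, label):
        """Learn from a single (value, label) pair as it arrives."""
        self.sums[label] = self.sums.get(label, 0.0) + value
        self.counts[label] = self.counts.get(label, 0) + 1

    def predict(self, value):
        """Return the label whose running mean is closest to the value."""
        return min(
            self.sums,
            key=lambda label: abs(value - self.sums[label] / self.counts[label]),
        )

model = OnlineCentroidModel()
for value in (1.0, 2.0, 3.0):
    model.update(value, "benign")
for value in (8.0, 9.0, 10.0):
    model.update(value, "malicious")

before = model.predict(7.0)

# Poisoning: the attacker repeatedly submits malicious-looking values
# with the wrong label, dragging the "benign" mean toward them.
for _ in range(20):
    model.update(8.0, "benign")

after = model.predict(7.0)
print(before, "->", after)
```

No code or model file was touched here; the attacker only supplied training input, which is exactly what such systems are designed to accept.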

Another much more sophisticated attack that relies on altering the model involves injecting a so-called “backdoor” into the model. A backdoor, in this context, is some secret functionality that will make the ML model selectively biased on-command. It requires both access to the model and a great deal of skill but might prove a very lucrative business. For example, ambitious attackers could backdoor a mortgage approval model. They could then sell a service to non-eligible applicants to help get their applications approved. Similarly, suppliers of biometric access control or image recognition systems could tamper with models they supply to include backdoors, allowing unauthorized access to buildings for specific people or even hiding people from video surveillance systems altogether.

Stealing the model

Imagine spending vast amounts of time and money on developing a complex machine learning system that predicts market trends with surprising accuracy. Now imagine a competitor who emerges from nowhere and has an equally accurate system in a matter of days. Sounds suspicious, doesn’t it?

As it turns out, ML models are just as susceptible to theft as any other technology. Even if the model is not bundled with an application or readily available for download (as is often the case), more savvy attackers can attempt to replicate it by flooding the ML system with a vast number of specially crafted queries, recording the output, and then training their own model on the results. This process gets even easier if the data the model was trained on is also accessible to attackers. Such a copycat model can often perform just as well as the original, which means you may lose a competitive advantage that cost considerable time, effort, and money to establish.
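The query-and-record idea can be illustrated with a deliberately trivial victim: a black-box function with a single hidden threshold. Real extraction attacks train a surrogate model on many recorded query results; the binary search below is a stand-in for that process, and every name and value in it is hypothetical.

```python
# Toy model extraction: recover a hidden decision boundary using only
# query access. make_victim and the threshold value are made up.
def make_victim(secret_threshold):
    """Return a black-box predict function; callers never see the threshold."""
    def predict(x):
        return "approve" if x >= secret_threshold else "deny"
    return predict

def extract_threshold(query, low, high, queries=30):
    """Binary-search the boundary, observing nothing but the model's outputs."""
    for _ in range(queries):
        mid = (low + high) / 2
        if query(mid) == "approve":
            high = mid
        else:
            low = mid
    return (low + high) / 2

victim = make_victim(secret_threshold=0.618)
stolen_threshold = extract_threshold(victim, 0.0, 1.0)
copycat = make_victim(stolen_threshold)

# Compare the copy against the original on inputs neither was tuned on.
test_points = [i / 100 for i in range(101)]
agreement = sum(victim(x) == copycat(x) for x in test_points) / len(test_points)
print(f"agreement with the original: {agreement:.0%}")
```

Thirty queries are enough here because the victim has one parameter; the more complex the model, the more queries an attacker needs, which is one reason unusual query volume is worth watching for.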

Safeguarding AI - Without a T-800

Unlike the aforementioned world-changing technologies, machine learning is still largely overlooked as an attack vector, and a comprehensive out-of-the-box security solution has yet to be released to protect it. However, there are a few simple steps that can help to minimize the risks that your precious AI-powered technology might be facing.

First of all, knowledge is the key. Being aware of the danger puts you in a position where you can start thinking of defensive measures. The better you understand your vulnerabilities, the potential threats you face, and the attacker behind them, the more effective your defenses will be. MITRE’s recently released knowledgebase called Adversarial Threat Landscape for Artificial-Intelligence Systems (ATLAS) is an excellent place to begin, and keep an eye on our research space, too, as we aim to make the knowledge surrounding machine learning attacks more accessible.

Don’t forget to keep your stakeholders educated and informed. Data scientists, ML engineers, developers, project managers, and even C-level management must be aware of ML security, albeit to different degrees. It is much easier to protect a robust system designed, developed, and maintained with security in mind - and by security-conscious people - than to treat security as an afterthought.

Beware of oversharing. Carefully assess which parts of your ML system and data need to be exposed to the customer. Share only as much information as necessary for the system to function efficiently.

Finally, help us help you! At HiddenLayer, we are not only spreading the word about ML security, but we are also in the process of developing the first Machine Learning Detection and Response solution. Don’t hesitate to reach out if you wish to book a demo, collaborate, discuss, brainstorm, or simply connect. After all, we’re stronger together!

If you wish to dive deeper into the inner workings of attacks against ML, watch out for our next blog, in which we will focus on the Tactics and Techniques of Adversarial ML from a more technical perspective. In the meantime, you can also learn a thing or two about the ML adversary lifecycle.

About HiddenLayer

HiddenLayer helps enterprises safeguard the machine learning models behind their most important products with a comprehensive security platform. Only HiddenLayer offers turnkey AI/ML security that does not add unnecessary complexity to models and does not require access to raw data and algorithms. Founded in March of 2022 by experienced security and ML professionals, HiddenLayer is based in Austin, Texas, and is backed by cybersecurity investment specialist firm Ten Eleven Ventures. For more information, visit www.hiddenlayer.com and follow us on LinkedIn or Twitter.

Adversarial Machine Learning: A New Frontier

Beware the Adversarial Pickle

Summary

In this blog, we look at the increasing societal dependence on machine learning and its pervasiveness throughout every facet of our lives. We then set our sights on the various methodologies attackers use to attack and exploit this new frontier. We explore what an adversarial machine learning attack is from a high level, the potential consequences thereof and why we believe that the time has come to look to safeguard our models, and, by virtue, the critical services that rely on them.

Introduction

Over the last decade, Machine Learning (ML) has become increasingly commonplace, crossing from the digital world into the physical one. While some technologies are practically synonymous with ML (like home voice assistants and self-driving cars), its presence isn’t always as noticeable when big buzzwords and flashy marketing jargon haven’t been used. Here is a non-exhaustive list of common machine learning use cases:

- Recommendation algorithms for streaming services and social networks

- Facial recognition/biometrics such as device unlocking

- Targeted ads tailored to specific demographics

- Anti-malware & anti-spam security solutions

- Automated customer support agents and chatbots

- Manufacturing, quality control, and warehouse logistics

- Bank loan, mortgage, or insurance application approval

- Financial fraud detection

- Medical diagnosis

- And many more!

Pretty incredible, right? But it’s not just Fortune 500 companies or sprawling multinationals using ML to perform critical business functions. With the ease of access to vast amounts of data, open-source libraries, and readily-available learning material, ML has been brought firmly into the hands of the people.

It's a game of give and take

Libraries such as scikit-learn, NumPy, TensorFlow, PyTorch, and Create ML have made it easier than ever to create ML models that solve complex problems, including tasks that only a few years ago could have been done solely by humans - and many, at that. Creating and implementing a model is now so frictionless that you can go from zero to hero in hours. However, as with most sprawling software ecosystems, as the barrier to entry lowers, the barrier to securing it rises.

As is often the case with significant technological advancements, we create, design, and build in a flurry, then gradually realize how the technology can be misused, abused, or attacked. With how easily ML can be harnessed and the depth to which the technology has been woven into our lives, we have to ask ourselves a few tricky questions:

- Could someone attack, disrupt or manipulate critical ML models?

- What are the potential consequences of an attack on an ML model?

- Are there any security controls in place to protect against attack?

And perhaps most crucially:

- Could you tell if you were under attack?

Depending on the criticality of the model and how an adversary could attack it, the consequences of an attack can range from unpleasant to catastrophic. As we increasingly rely on ML-powered solutions, the attacks against ML models - known broadly as adversarial machine learning (AML) - are becoming more pervasive than ever.

What is an Adversarial Machine Learning attack?

An adversarial machine learning attack can take many forms, from a single pixel placed within an image to produce a wrong classification to manipulating a stock trading model through data poisoning or inference for financial gain. Adversarial ML attacks do not resemble your typical malware infection. At least, not yet - we’ll explore this later!

Image source: https://github.com/Hyperparticle/one-pixel-attack-keras

Adversarial ML is a relatively new, cutting-edge frontier of cybersecurity that is still largely in its infancy. Research into novel attacks that produce erroneous behavior in models or steal intellectual property is on the rise. An article on the technology news site VentureBeat states that in 2014 there were zero papers regarding adversarial ML on the research sharing repository arXiv.org. As of 2020, it puts this number at approximately 1,100. Today, there are over 2,000.

The recently formed MITRE ATLAS (Adversarial Threat Landscape for Artificial-Intelligence Systems), made by the creators of MITRE ATT&CK, documents several case studies of adversarial attacks on ML production systems, none of which have been performed in controlled settings. It's worth noting that there is no regulatory requirement to disclose adversarial ML attacks at the time of writing, meaning that the actual number, while almost certainly higher, may remain a mystery. A publication that deserves an honorable mention is the 2019 draft of ‘A Taxonomy and Terminology of Adversarial Machine Learning’ by the National Institute of Standards and Technology (NIST). Its content has proven invaluable in creating a common language and conceptual framework to help define the adversarial machine learning problem space.

It's not just the algorithm

Since its inception, AML research has primarily focused on model/algorithm-centric attacks such as data poisoning, inference, and evasion - to name but a few. However, the attack surface has become wider still. Instead of targeting the underlying algorithm, attackers are choosing to target how models are stored on disk and in memory, and how they’re deployed and distributed. While ML is often touted as a transcendent technology that could almost be beyond the reach of us mere mortals, it’s still bound by the same constraints as any other piece of software, meaning many similar vulnerabilities can be found and exploited. However, these are often outside the purview of existing security solutions, such as anti-virus and EDR.

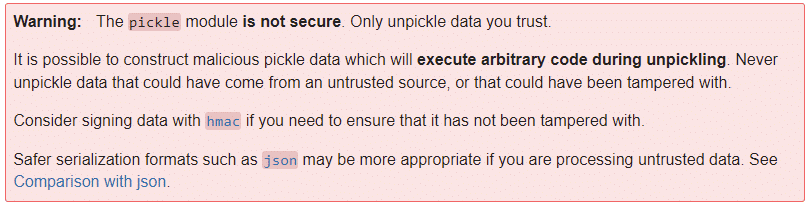

To illustrate this point, we need look no further than the insecurity and abuse of the Pickle file format. For the uninitiated, Pickle is a serialization format that has become almost ubiquitous in the storage and sharing of pre-trained machine learning models. Researchers from Trail of Bits have shown, using their open source tool called ‘Fickling’, how the format can execute malicious code as soon as a model is loaded. This significant insecurity has been acknowledged since at least 2011, as per the Pickle documentation:

Considering that this has been a known issue for over a decade, coupled with the continued use and ubiquity of this serialization format, it makes the thought of an adversarial pickle a scary one.
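The mechanism behind this is easy to demonstrate with Python's standard `pickle` module alone. In the sketch below, the `Payload` class is hypothetical and a harmless `print` call stands in for whatever command an attacker would actually run; the `__reduce__` hook is the documented way for an object to tell the unpickler which callable to invoke when the data is loaded.

```python
# Minimal demonstration that unpickling can invoke arbitrary callables.
# Payload is a made-up class; print() stands in for an attacker's command.
import pickle

class Payload:
    def __reduce__(self):
        # Tells pickle: "to rebuild this object, call print(...)".
        # A real attack would name a far more dangerous callable here.
        return (print, ("code ran during pickle.loads()",))

malicious_bytes = pickle.dumps(Payload())

# The victim only "loads a model file", yet the callable runs immediately.
result = pickle.loads(malicious_bytes)

# What comes back is print()'s return value, not a Payload instance.
print(type(result).__name__)
```

Nothing about the `pickle.loads` call signals that code is about to run, which is why loading a pickled model from an untrusted source is equivalent to running an untrusted program.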

Cost and consequence

The widespread adoption of ML, combined with the increasing level of responsibility and trust, dramatically increases the potential attack surface for adversarial attacks and possible consequences. Businesses across every vertical depend on machine learning for their critical business functions, which has led the machine learning market to an approximate valuation of over $100 billion, with estimates of up to multiple trillion by the year 2030. These figures represent an ever enticing target for cybercriminals and espionage alike.

The implications of an adversarial attack vary depending on the application of the model. For example, a model that classifies types of iris flowers will have a different threat model than a model that predicts heart disease based on a series of historical indicators. However, even with models that don't have a significant risk of ‘going wrong’, the model(s) you deploy may be your company's crown jewels. That same iris flower classifier may be your competitive advantage in the market. If it were stolen, you would risk losing your IP and your advantage along with it. While not a fully comprehensive breakdown, the following image helps to paint a picture of the potential ramifications of an adversarial attack on an ML model:

But why now?

We've all seen news articles warning of impending doom caused by machine learning and artificial intelligence. It's easy to get lost in fear-mongering and can prove difficult to separate the alarmist from the pragmatist. Even reading this article, it’s easy to look on with skepticism. But we're not talking about the potential consequences of ‘the singularity’ here - HAL, Skynet, or the Cylons chasing a particular Battlestar will all agree that we're not quite there yet. We are talking about ensuring that security is taken into active consideration in the development, deployment, and execution of ML models, especially given the level of trust placed upon them.

Just as ML transitioned from a field of conceptual research into a widely accessible and established sector, it is now transitioning into a new phase, one where security must be a major focal point.

Conclusions

Machine learning has reached another evolutionary inflection point, where it has become more accessible than ever and no longer requires an advanced background in hard data science/statistics. As ML models become easier to deploy and use, and more commonplace within our programming toolkit, there is more room for security oversights and vulnerabilities to be introduced.

As a result, AML attacks are becoming steadily more prevalent. The amount of academic and industry research in this area has been increasing, with more attacks focusing not on the model itself but on how it is deployed and implemented. Such attacks are a rising threat that has largely gone under the radar.

Even though AML is at the cutting edge of modern cybersecurity and may not yet be as household a name as your neighborhood ransomware group, we have to ask the question: when is the best time to defend yourself from an attack, before or after it’s happened?

In the News

HiddenLayer’s research is shaping global conversations about AI security and trust.

HiddenLayer Selected as Awardee on $151B Missile Defense Agency SHIELD IDIQ Supporting the Golden Dome Initiative

Austin, TX – December 23, 2025 – HiddenLayer, the leading provider of Security for AI, today announced it has been selected as an awardee on the Missile Defense Agency’s (MDA) Scalable Homeland Innovative Enterprise Layered Defense (SHIELD) multiple-award, indefinite-delivery/indefinite-quantity (IDIQ) contract. The SHIELD IDIQ has a ceiling value of $151 billion and serves as a core acquisition vehicle supporting the Department of Defense’s Golden Dome initiative to rapidly deliver innovative capabilities to the warfighter.

The program enables MDA and its mission partners to accelerate the deployment of advanced technologies with increased speed, flexibility, and agility. HiddenLayer was selected based on its successful past performance with ongoing US Federal contracts and projects with the Department of Defense (DoD) and United States Intelligence Community (USIC). “This award reflects the Department of Defense’s recognition that securing AI systems, particularly in highly-classified environments is now mission-critical,” said Chris “Tito” Sestito, CEO and Co-founder of HiddenLayer. “As AI becomes increasingly central to missile defense, command and control, and decision-support systems, securing these capabilities is essential. HiddenLayer’s technology enables defense organizations to deploy and operate AI with confidence in the most sensitive operational environments.”

Underpinning HiddenLayer’s unique solution for the DoD and USIC is HiddenLayer’s Airgapped AI Security Platform, the first solution designed to protect AI models and development processes in fully classified, disconnected environments. Deployed locally within customer-controlled environments, the platform supports strict US Federal security requirements while delivering enterprise-ready detection, scanning, and response capabilities essential for national security missions.

HiddenLayer’s Airgapped AI Security Platform delivers comprehensive protection across the AI lifecycle, including:

- Comprehensive Security for Agentic, Generative, and Predictive AI Applications: Advanced AI discovery, supply chain security, testing, and runtime defense.

- Complete Data Isolation: Sensitive data remains within the customer environment and cannot be accessed by HiddenLayer or third parties unless explicitly shared.

- Compliance Readiness: Designed to support stringent federal security and classification requirements.

- Reduced Attack Surface: Minimizes exposure to external threats by limiting unnecessary external dependencies.

“By operating in fully disconnected environments, the Airgapped AI Security Platform provides the peace of mind that comes with complete control,” continued Sestito. “This release is a milestone for advancing AI security where it matters most: government, defense, and other mission-critical use cases.”

The SHIELD IDIQ supports a broad range of mission areas and allows MDA to rapidly issue task orders to qualified industry partners, accelerating innovation in support of the Golden Dome initiative’s layered missile defense architecture.

Performance under the contract will occur at locations designated by the Missile Defense Agency and its mission partners.

About HiddenLayer

HiddenLayer, a Gartner-recognized Cool Vendor for AI Security, is the leading provider of Security for AI. Its security platform helps enterprises safeguard their agentic, generative, and predictive AI applications. HiddenLayer is the only company to offer turnkey security for AI that does not add unnecessary complexity to models and does not require access to raw data and algorithms. Backed by patented technology and industry-leading adversarial AI research, HiddenLayer’s platform delivers supply chain security, runtime defense, security posture management, and automated red teaming.

Contact

SutherlandGold for HiddenLayer

hiddenlayer@sutherlandgold.com

HiddenLayer Announces AWS GenAI Integrations, AI Attack Simulation Launch, and Platform Enhancements to Secure Bedrock and AgentCore Deployments

As organizations rapidly adopt generative AI, they face increasing risks of prompt injection, data leakage, and model misuse. HiddenLayer’s security technology, built on AWS, helps enterprises address these risks while maintaining speed and innovation.

AUSTIN, TX — December 1, 2025 — HiddenLayer, the leading AI security platform for agentic, generative, and predictive AI applications, today announced expanded integrations with Amazon Web Services (AWS) Generative AI offerings and a major platform update debuting at AWS re:Invent 2025. HiddenLayer offers additional security features for enterprises using generative AI on AWS, complementing existing protections for models, applications, and agents running on Amazon Bedrock, Amazon Bedrock AgentCore, Amazon SageMaker, and SageMaker Model Serving Endpoints.

“As organizations embrace generative AI to power innovation, they also inherit a new class of risks unique to these systems,” said Chris Sestito, CEO and Co-Founder of HiddenLayer. “Working with AWS, we’re ensuring customers can innovate safely, bringing trust, transparency, and resilience to every layer of their AI stack.”

Built on AWS to Accelerate Secure AI Innovation

HiddenLayer’s AI Security Platform and integrations are available in AWS Marketplace, offering native support for Amazon Bedrock and Amazon SageMaker. The company complements AWS infrastructure security by providing AI-specific threat detection, identifying risks within model inference and agent cognition that traditional tools overlook.

Through automated security gates, continuous compliance validation, and real-time threat blocking, HiddenLayer enables developers to maintain velocity while giving security teams confidence and auditable governance for AI deployments.

Alongside these integrations, HiddenLayer is introducing a complete platform redesign and launching a new AI Discovery module and an enhanced AI Attack Simulation module, further strengthening its end-to-end AI Security Platform that protects agentic, generative, and predictive AI systems.

Key enhancements include:

- AI Discovery: Identifies AI assets within technical environments to build AI asset inventories.

- AI Attack Simulation: Automates adversarial testing and red teaming to identify vulnerabilities before deployment.

- Complete UI/UX Revamp: Simplified sidebar navigation and reorganized settings for faster workflows across AI Discovery, AI Supply Chain Security, AI Attack Simulation, and AI Runtime Security.

- Enhanced Analytics: Filterable and exportable data tables, with new module-level graphs and charts.

- Security Dashboard Overview: Unified view of AI posture, detections, and compliance trends.

- Learning Center: In-platform documentation and tutorials, with guided walkthroughs planned for a future release.

HiddenLayer will demonstrate these capabilities live at AWS re:Invent 2025, December 1–5 in Las Vegas.

To learn more or request a demo, visit https://hiddenlayer.com/reinvent2025/.

About HiddenLayer

HiddenLayer, a Gartner-recognized Cool Vendor for AI Security, is the leading provider of Security for AI. Its platform helps enterprises safeguard agentic, generative, and predictive AI applications without adding unnecessary complexity or requiring access to raw data and algorithms. Backed by patented technology and industry-leading adversarial AI research, HiddenLayer delivers supply chain security, runtime defense, posture management, and automated red teaming.

For more information, visit www.hiddenlayer.com.

Press Contact:

SutherlandGold for HiddenLayer

hiddenlayer@sutherlandgold.com

HiddenLayer Joins Databricks’ Data Intelligence Platform for Cybersecurity

On September 30, Databricks officially launched its <a href="https://www.databricks.com/blog/transforming-cybersecurity-data-intelligence?utm_source=linkedin&utm_medium=organic-social">Data Intelligence Platform for Cybersecurity</a>, a significant step toward unifying data, AI, and security under one roof. At HiddenLayer, we’re proud to be part of this new platform, which marks a milestone in the industry's direction.

Why Databricks’ Data Intelligence Platform for Cybersecurity Matters for AI Security

Cybersecurity and AI are now inseparable. Modern defenses rely heavily on machine learning models, but that also introduces new attack surfaces. Models can be compromised through adversarial inputs, data poisoning, or theft. These attacks can result in missed fraud detection, compliance failures, and disrupted operations.

Until now, data platforms and security tools have operated mainly in silos, creating complexity and risk.

The Databricks Data Intelligence Platform for Cybersecurity is a unified, AI-powered, and ecosystem-driven platform that empowers partners and customers to modernize security operations, accelerate innovation, and unlock new value at scale.

How HiddenLayer Secures AI Applications Inside Databricks

HiddenLayer adds the critical layer of security for AI models themselves. Our technology scans and monitors machine learning models for vulnerabilities, detects adversarial manipulation, and ensures models remain trustworthy throughout their lifecycle.

By integrating with Databricks Unity Catalog, we make AI application security seamless, auditable, and compliant with emerging governance requirements. This empowers organizations to demonstrate due diligence while accelerating the safe adoption of AI.

The Future of Secure AI Adoption with Databricks and HiddenLayer

The Databricks Data Intelligence Platform for Cybersecurity marks a turning point in how organizations must approach the intersection of AI, data, and defense. HiddenLayer ensures the AI applications at the heart of these systems remain safe, auditable, and resilient against attack.

As adversaries grow more sophisticated and regulators demand greater transparency, securing AI is an immediate necessity. By embedding HiddenLayer directly into the Databricks ecosystem, enterprises gain the assurance that they can innovate with AI while maintaining trust, compliance, and control.

In short, the future of cybersecurity will not be built solely on data or AI, but on the secure integration of both. Together, Databricks and HiddenLayer are making that future possible.

FAQ: Databricks and HiddenLayer AI Security

What is the Databricks Data Intelligence Platform for Cybersecurity?

The Databricks Data Intelligence Platform for Cybersecurity delivers the only unified, AI-powered, and ecosystem-driven platform that empowers partners and customers to modernize security operations, accelerate innovation, and unlock new value at scale.

Why is AI application security important?

AI applications and their underlying models can be attacked through adversarial inputs, data poisoning, or theft. Securing models reduces risks of fraud, compliance violations, and operational disruption.

How does HiddenLayer integrate with Databricks?

HiddenLayer integrates with Databricks Unity Catalog to scan models for vulnerabilities, monitor for adversarial manipulation, and ensure compliance with AI governance requirements.
