Advancing the Science of AI Security

The HiddenLayer AI Security Research team uncovers vulnerabilities, develops defenses, and shapes global standards to ensure AI remains secure, trustworthy, and resilient.

Turning Discovery Into Defense

Our mission is to identify and neutralize emerging AI threats before they impact the world. The HiddenLayer AI Security Research team investigates adversarial techniques, supply chain compromises, and agentic AI risks, transforming findings into actionable security advancements that power the HiddenLayer AI Security Platform and inform global policy.

Our AI Security Research Team

HiddenLayer’s research team combines offensive security experience, academic rigor, and a deep understanding of machine learning systems.

Kenneth Yeung

Senior AI Security Researcher


Conor McCauley

Adversarial Machine Learning Researcher


Jim Simpson

Principal Intel Analyst


Jason Martin

Director, Adversarial Research


Andrew Davis

Chief Data Scientist


Marta Janus

Principal Security Researcher


Eoin Wickens

Director of Threat Intelligence


Kieran Evans

Principal Security Researcher


Ryan Tracey

Principal Security Researcher


Kasimir Schulz

Director, Security Research


Our Impact by the Numbers

Quantifying the reach and influence of HiddenLayer’s AI Security Research.

Reduction in exposure to AI exploits

Disclosed through our security research

Issued patents

Latest Discoveries

Explore HiddenLayer’s latest vulnerability disclosures, advisories, and technical insights advancing the science of AI security.

Adversarial Machine Learning: A New Frontier

Beware the Adversarial Pickle

Summary

In this blog, we look at society's increasing dependence on machine learning and its pervasiveness throughout every facet of our lives. We then set our sights on the various methodologies attackers use to attack and exploit this new frontier. We explore what an adversarial machine learning attack is from a high level, its potential consequences, and why we believe that the time has come to safeguard our models and, by extension, the critical services that rely on them.

Introduction

Over the last decade, Machine Learning (ML) has become increasingly commonplace, transcending the digital world and entering the physical one. While some technologies are practically synonymous with ML (like home voice assistants and self-driving cars), its presence isn’t always as noticeable when big buzzwords and flashy marketing jargon haven’t been used. Here is a non-exhaustive list of common machine learning use cases:

- Recommendation algorithms for streaming services and social networks

- Facial recognition/biometrics such as device unlocking

- Targeted ads tailored to specific demographics

- Anti-malware & anti-spam security solutions

- Automated customer support agents and chatbots

- Manufacturing, quality control, and warehouse logistics

- Bank loan, mortgage, or insurance application approval

- Financial fraud detection

- Medical diagnosis

- And many more!

Pretty incredible, right? But it’s not just Fortune 500 companies or sprawling multinationals using ML to perform critical business functions. With the ease of access to vast amounts of data, open-source libraries, and readily-available learning material, ML has been brought firmly into the hands of the people.

It's a game of give and take

Libraries such as scikit-learn, NumPy, TensorFlow, PyTorch, and CreateML have made it easier than ever to create ML models that solve complex problems, including tasks that only a few years ago could have been done solely by humans - and many, at that. Creating and implementing a model is now so frictionless that you can go from zero to hero in hours. However, as with most sprawling software ecosystems, as the barrier to entry lowers, the barrier to securing it rises.

As is often the case with significant technological advancements, we create, design, and build in a flurry, then gradually realize how the technology can be misused, abused, or attacked. With how easily ML can be harnessed and the depth to which the technology has been woven into our lives, we have to ask ourselves a few tricky questions:

- Could someone attack, disrupt or manipulate critical ML models?

- What are the potential consequences of an attack on an ML model?

- Are there any security controls in place to protect against attack?

And perhaps most crucially:

- Could you tell if you were under attack?

Depending on the criticality of the model and how an adversary could attack it, the consequences of an attack can range from unpleasant to catastrophic. As we increasingly rely on ML-powered solutions, the attacks against ML models - known broadly as adversarial machine learning (AML) - are becoming more pervasive than ever.

What is an Adversarial Machine Learning attack?

An adversarial machine learning attack can take many forms, from a single pixel placed within an image to produce a wrong classification to manipulating a stock trading model through data poisoning or inference for financial gain. Adversarial ML attacks do not resemble your typical malware infection. At least, not yet - we’ll explore this later!

Image source: https://github.com/Hyperparticle/one-pixel-attack-keras

Adversarial ML is a relatively new, cutting-edge frontier of cybersecurity that is still in its infancy. Research into novel attacks that produce erroneous behavior in models and can steal intellectual property is on the rise. An article on the technology news site VentureBeat states that in 2014 there were zero papers regarding adversarial ML on the research-sharing repository arXiv.org. As of 2020, they record this number as approximately 1,100. Today, there are over 2,000.

The recently formed MITRE ATLAS (Adversarial Threat Landscape for Artificial-Intelligence Systems), made by the creators of MITRE ATT&CK, documents several case studies of adversarial attacks on ML production systems, none of which have been performed in controlled settings. It's worth noting that there is no regulatory requirement to disclose adversarial ML attacks at the time of writing, meaning that the actual number, while almost certainly higher, may remain a mystery. A publication that deserves an honorable mention is the 2019 draft of ‘A Taxonomy and Terminology of Adversarial Machine Learning’ by the National Institute of Standards and Technology (NIST), which has proven invaluable in creating a common language and conceptual framework to help define the adversarial machine learning problem space.

It's not just the algorithm

Since its inception, AML research has primarily focused on model/algorithm-centric attacks such as data poisoning, inference, and evasion - to name but a few. However, the attack surface has become wider still. Rather than targeting the underlying algorithm, attackers are choosing to target how models are stored on disk and in memory, and how they’re deployed and distributed. While ML is often touted as a transcendent technology that could almost be beyond the reach of us mere mortals, it’s still bound by the same constraints as any other piece of software, meaning many similar vulnerabilities can be found and exploited. However, these are often outside the purview of existing security solutions, such as anti-virus and EDR.

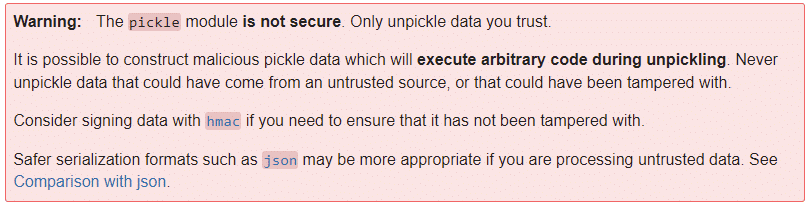

To illustrate this point, we need not look any further than the insecurity and abuse of the Pickle file format. For the uninitiated, Pickle is a serialized storage format which has become almost synonymous with the storage and sharing of pre-trained machine learning models. Researchers from Trail of Bits show how the format can execute malicious code as soon as a model is loaded, using their open source tool called ‘Fickling’. This significant insecurity has been acknowledged since at least 2011 in the Pickle documentation.

Considering that this has been a known issue for over a decade, coupled with the continued use and ubiquity of this serialization format, it makes the thought of an adversarial pickle a scary one.
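To make the risk concrete, here is a minimal sketch of the underlying mechanism using only Python's standard `pickle` module. It is not a reproduction of the Fickling tool. The class name `NotReallyAModel` and the helper `safe_loads` are hypothetical, and the payload deliberately calls the harmless built-in `int` where a real attacker would reference something far more dangerous. The restrictive unpickler follows the "restricting globals" pattern of overriding `find_class`, and assumes the data you expect contains only plain Python types.

```python
import io
import pickle


class NotReallyAModel:
    """Hypothetical stand-in for a tampered model object."""

    def __reduce__(self):
        # pickle records "call int('42')" instead of the object's state.
        # An attacker would point this at a callable that runs commands.
        return (int, ("42",))


class DenyGlobalsUnpickler(pickle.Unpickler):
    """Refuses every global lookup, so only plain data types can load."""

    def find_class(self, module, name):
        raise pickle.UnpicklingError(f"blocked global: {module}.{name}")


def safe_loads(data: bytes):
    """Hypothetical helper: unpickle with all callables blocked."""
    return DenyGlobalsUnpickler(io.BytesIO(data)).load()


payload = pickle.dumps(NotReallyAModel())

# The embedded callable runs inside loads(); no NotReallyAModel comes back.
result = pickle.loads(payload)

try:
    safe_loads(payload)
    blocked = False
except pickle.UnpicklingError:
    blocked = True
```

Blocking every global is too strict for real model files, which usually need at least some framework classes, so in practice an allow list or a non-executable serialization format is the more realistic option.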

Cost and consequence

The widespread adoption of ML, combined with the increasing level of responsibility and trust placed in it, dramatically increases the potential attack surface for adversarial attacks and their possible consequences. Businesses across every vertical depend on machine learning for their critical business functions, which has led the machine learning market to an approximate valuation of over $100 billion, with estimates of up to multiple trillions by the year 2030. These figures represent an ever more enticing target for cybercriminals and espionage actors alike.

The implications of an adversarial attack vary depending on the application of the model. For example, a model that classifies types of iris flowers will have a different threat model than a model that predicts heart disease based on a series of historical indicators. However, even with models that don't have a significant risk of ‘going wrong’, the model(s) you deploy may be your company's crown jewels. That same iris flower classifier may be your competitive advantage in the market. If it were stolen, you would risk losing your IP and your advantage along with it. While not a fully comprehensive breakdown, the following image helps to paint a picture of the potential ramifications of an adversarial attack on an ML model:

But why now?

We've all seen news articles warning of impending doom caused by machine learning and artificial intelligence. It's easy to get lost in fear-mongering and can prove difficult to separate the alarmist from the pragmatist. Even reading this article, it’s easy to look on with skepticism. But we're not talking about the potential consequences of ‘the singularity’ here - HAL, Skynet, or the Cylons chasing a particular Battlestar will all agree that we're not quite there yet. We are talking about ensuring that security is taken into active consideration in the development, deployment, and execution of ML models, especially given the level of trust placed upon them.

Just as ML transitioned from a field of conceptual research into a widely accessible and established sector, it is now transitioning into a new phase, one where security must be a major focal point.

Conclusions

Machine learning has reached another evolutionary inflection point, where it has become more accessible than ever and no longer requires an advanced background in hard data science/statistics. As ML models become easier to deploy and use, and more commonplace within our programming toolkit, there is more room for security oversights and vulnerabilities to be introduced.

As a result, AML attacks are becoming steadily more prevalent. The amount of academic and industry research in this area has been increasing, with more attacks choosing not to focus on the model itself but on how it is deployed and implemented. Such attacks are a rising threat that has largely gone under the radar.

Even though AML is at the cutting edge of modern cybersecurity and may not yet be as household a name as your neighborhood ransomware group, we have to ask the question: when is the best time to defend yourself from an attack, before or after it’s happened?

About HiddenLayer

HiddenLayer helps enterprises safeguard the machine learning models behind their most important products with a comprehensive security platform. Only HiddenLayer offers turnkey AI/ML security that does not add unnecessary complexity to models and does not require access to raw data and algorithms. Founded in March of 2022 by experienced security and ML professionals, HiddenLayer is based in Austin, Texas, and is backed by cybersecurity investment specialist firm Ten Eleven Ventures. For more information, visit www.hiddenlayer.com and follow us on LinkedIn or Twitter.

The Machine Learning Adversary Lifecycle

Your Attack Surface Just Got a Whole Lot Bigger

Summary

Understanding and mitigating security risks in machine learning (ML) and artificial intelligence (AI) is an emerging field in cybersecurity, with reverse engineers, forensic analysts, incident responders, and threat intelligence experts joining forces with data scientists to explore and uncover the ML/AI threat landscape. Key to this effort is describing the anatomy of attacks to stakeholders, from CISOs to MLOps and cybersecurity practitioners, helping organizations better assess risk and implement robust defensive strategies.

This blog explores the adversarial machine learning lifecycle from both an attacker’s and defender’s perspective. It aims to raise awareness of the types of ML attacks and their progression, as well as highlight security considerations throughout the MLOps software development lifecycle (SDLC). Knowledge of adversarial behavior is crucial to driving threat modeling and risk assessments, ultimately helping to improve the security posture of any ML/AI project.

A Broad New Attack Surface

Over the past few decades, machine learning (ML) has been increasingly utilized to solve complex statistical problems involving large data sets across various industries, from healthcare and fintech to cyber-security, automotive, and defense. Primarily driven by exponential growth in storage and computing power and great strides in academia, challenges that seemed insurmountable just a decade ago are now routinely and cost-effectively solved using ML. What began as academic research has now blossomed into a vast industry, with easily accessible libraries, toolkits, and APIs lowering the barrier of entry for practitioners. However, as with any software ecosystem, as soon as it gains sufficient popularity, it will swiftly attract the attention of security researchers and hackers alike, who look to exploit weaknesses, sometimes for fun, sometimes for profit, and sometimes for far more nefarious purposes.

To date, most adversarial ML/AI research has focused on the mathematical aspect, making algorithms more robust in handling malicious input. However, over the past few years, more security researchers have begun exploring ML algorithms and how models are developed, maintained, packaged, and deployed, hunting for weaknesses and vulnerabilities across the broader software ecosystem. These efforts have led to the frequent discovery of many new attack techniques and, in turn, a greater understanding of how practical attacks are performed against real-world ML implementations. Lately, it has been possible to take a more holistic view of ML attacks and devise comprehensive threat models and lifecycles, somewhat akin to the Lockheed Martin cyber kill chain or MITRE ATT&CK framework. This crucial undertaking has allowed the security industry to better assess and quantify the risks associated with ML and develop a greater understanding of how to implement mitigation strategies and countermeasures.

Adversary Tactics and Techniques - Know Your Craft

Understanding how practical attacks against machine learning implementations are conducted, the tactics and techniques adversaries employ, as well as performing simple threat modeling throughout the MLOps lifecycle, allows us to identify the most effective ways in which to implement countermeasures to perceived threats. To aid in this process, it is helpful to understand how adversaries perform attacks.

In 2021, MITRE, in collaboration with organizations including Microsoft, Bosch, IBM, and NVIDIA, launched MITRE ATLAS, an “Adversarial Threat Landscape for Artificial-Intelligence Systems,” which provides a comprehensive knowledgebase of adversary tactics and techniques and a matrix outlining the progression of attacks throughout the attack lifecycle. It is an excellent introduction for MLOps and cybersecurity teams, and it is highly recommended that you peruse the matrix and case studies to familiarise yourself with practical, real-world attack examples.

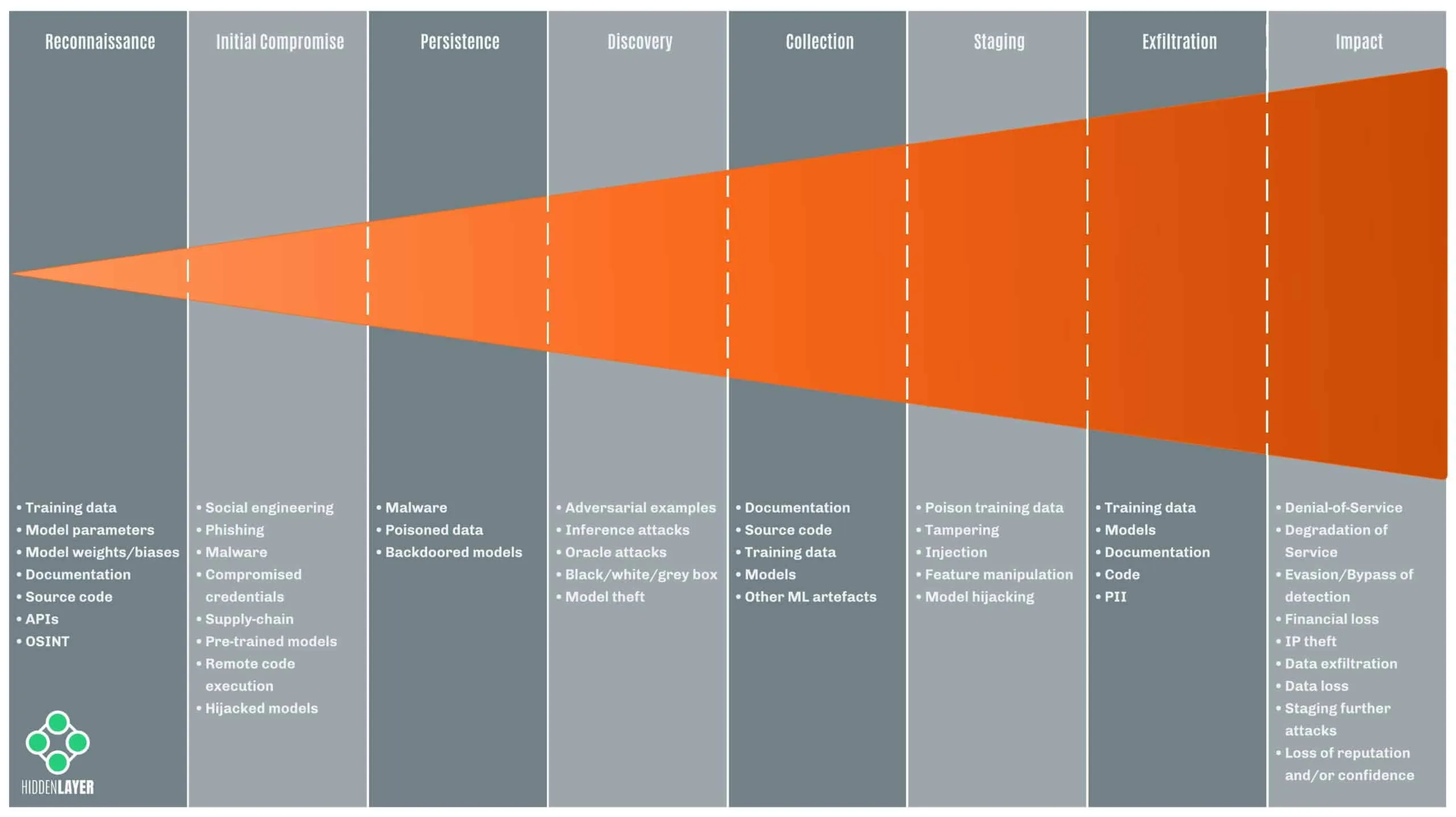

Adversary Lifecycle - An Attacker's Perspective

The progression of attacks in the MITRE ATLAS matrix may look quite familiar to anyone with a cyber-security background, and that’s because many of the stages are present in more traditional adversary lifecycles for dealing with malware and intrusions. As with most adversary lifecycles, it is typically cheaper and simpler to disrupt attacks during the early phases rather than the later ones, something that’s worth bearing in mind when threat modeling for MLOps.

Let’s give a breakdown of the most common stages of the machine learning adversary lifecycle and consider an attacker’s objectives. It is worth noting that attacks will usually comprise several of the following stages, but not necessarily all, depending on the techniques and motives of the attacker.

Reconnaissance

During the reconnaissance phase, an adversary typically tries to infer information about the target model, parameters, training set, or deployment. Methods employed include searching online publications for revealing information about models and training sets, reverse engineering/debugging software, probing endpoints/APIs, and social engineering.

Any attacks conducted at this stage are usually considered “black-box” attacks, with the adversary possessing little to no knowledge of the target model or systems and aiming to boost their understanding.

Information that an attacker gleans either actively or passively can be used to tailor subsequent attacks. As such, it is best to keep information about your models confidential and ensure that robust strategies are in place for dealing with malicious input at decision time.

Initial Compromise

During the initial compromise stage, an adversary obtains access to systems hosting machine learning artifacts. This could be through traditional cyber-attacks, such as social engineering, malware deployment, software supply chain compromise, compromised edge-computing devices or containers, or attacks against hardware and firmware. The attacker’s objectives at this stage could be to poison training data, steal sensitive information, or establish further persistence.

Once an attacker has a partial understanding of either the model, training data, or deployment, they can begin to conduct “grey-box” attacks based on the information gleaned.

Persistence

Maintaining persistence is a term used to describe threats that survive a system reboot, usually through autorun mechanisms provided by the operating system. For ML attacks, adversaries can maintain persistence via poisoned data that persists on disk, backdoored model files, or code that can be used to tamper with models at runtime.

Discovery

Like the reconnaissance stage, when performing discovery, an attacker tries to determine information about the target model, parameters, or training data. Armed with access to a model, either locally or via remote API, “oracle attacks” can be performed to probe models to determine how they might have been trained and configured, determine if a sample was perhaps present in the training set, or to try and reconstruct the training set or model entirely.

Once an adversary has full knowledge of a target model, they can begin to conduct “white-box” attacks, greatly simplifying the process of hunting for vulnerabilities, generating adversarial samples, and staging further attacks. With enough knowledge of the input features, attackers can also train surrogate (or proxy) models that can be used to simulate white-box attacks. This has proven a reliable technique for generating adversarial samples to evade detection from cyber-security machine learning solutions.
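As a toy illustration of how probing an endpoint can reconstruct a model, the sketch below recovers the decision boundary of a one-feature classifier using only the labels it returns. Everything here is an assumption made for illustration: `make_black_box` stands in for a remote prediction API, the "model" is a single secret threshold, and real models have far more parameters and need far more queries.

```python
from typing import Callable, Tuple


def make_black_box(secret_threshold: float) -> Callable[[float], int]:
    """Hypothetical remote model: callers only ever see the 0/1 label."""

    def predict(score: float) -> int:
        return 1 if score >= secret_threshold else 0

    return predict


def estimate_threshold(
    predict: Callable[[float], int],
    low: float,
    high: float,
    tolerance: float = 1e-3,
) -> Tuple[float, int]:
    """Binary-search the decision boundary using label-only queries."""
    queries = 0
    while high - low > tolerance:
        mid = (low + high) / 2
        queries += 1
        if predict(mid) == 1:
            high = mid  # boundary is at or below mid
        else:
            low = mid  # boundary is above mid
    return (low + high) / 2, queries


oracle = make_black_box(secret_threshold=0.618)
estimate, queries_used = estimate_threshold(oracle, 0.0, 1.0)
print(f"estimated boundary {estimate:.4f} after {queries_used} queries")
```

The recovered threshold acts as a crude surrogate for the original model, which is why the number and pattern of queries from a single client can be a useful signal for defenders.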

Collection

During the collection stage, adversaries aim to harvest sensitive information from documentation and source code, as well as machine learning artifacts such as models and training data, aiming to elevate their knowledge sufficiently to start performing grey or white-box attacks.

Staging

Staging can be fairly broad in scope as it comprises the deployment of common malware and attack tooling alongside more bespoke attacks against machine learning models.

The staging of adversarial ML attacks can be broadly classified as training-time attacks and decision-time attacks.

Training-time attacks occur during the data processing, training, and evaluation stages of the MLOps development lifecycle. They may include poisoning datasets through injection or manipulation, and training substitute models for further “white-box” interrogation.
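The effect of poisoning by injection can be shown with a deliberately tiny example. The sketch below trains a nearest-centroid classifier on one-dimensional data, then retrains it after an attacker injects three mislabeled samples. The data, the classifier, and the function names are all made up for illustration; real poisoning attacks are usually far subtler than this.

```python
from statistics import mean
from typing import Dict, List, Tuple

Sample = Tuple[float, int]  # (feature value, label)


def train_centroids(data: List[Sample]) -> Dict[int, float]:
    """Nearest-centroid 'training': one mean feature value per label."""
    labels = {label for _, label in data}
    return {
        label: mean([x for x, y in data if y == label])
        for label in labels
    }


def predict(centroids: Dict[int, float], x: float) -> int:
    """Return the label whose centroid is closest to x."""
    return min(centroids, key=lambda label: abs(x - centroids[label]))


clean = [(1.0, 0), (2.0, 0), (3.0, 0), (7.0, 1), (8.0, 1), (9.0, 1)]

# Injected samples: extreme values deliberately given label 0.
poison = [(20.0, 0), (20.0, 0), (20.0, 0)]

clean_model = train_centroids(clean)
poisoned_model = train_centroids(clean + poison)

# The same input is classified differently once the poison is included.
before = predict(clean_model, 4.0)
after = predict(poisoned_model, 4.0)
```

Three injected points are enough to drag the class 0 centroid from 2.0 to 11.0, so an input that previously fell on the class 0 side of the boundary is now assigned to class 1.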

Decision-time (or inference-time) attacks occur during run-time as a model makes predictions. These attacks typically focus on interrogating models to determine information about features, training data, or hyperparameters used for tuning. However, tampering with models on disk or injecting backdoors into pre-trained models may also be a type of decision-time attack. We colloquially refer to such attacks as model hijacking.

Exfiltration

In most adversary lifecycles, exfiltration refers to the loss/theft (or unauthorized copying/movement) of sensitive data from a device. In an adversarial machine learning setting, exfiltration typically involves the theft of training data, code, or models (either directly or via inference attacks against the model).

In addition, machine learning models may sometimes reveal secrets about their training data or even leak sensitive information/PII (potentially in breach of data protection laws and regulations), which adversaries may try to obtain through various attacks.

Impact

While an adversary might have one specific endgame in mind, such as bypassing security controls or stealing data, the overall impact on a victim might be extensive, including (but certainly not limited to):

- Denial-of-Service

- Degradation of Service

- Evasion/Bypass of Detection

- Financial Gain/Loss

- Intellectual Property Theft

- Data Exfiltration

- Data Loss

- Staging Attacks

- Loss of Reputation/Confidence

- Disinformation/Manipulation of Public Opinion

- Loss of Life/Personal Injury

Understanding the likely endgames for an attacker with respect to relevant impact scenarios can help to define and adopt a robust defensive security posture that is mindful of factors such as cost, risk, likelihood, and impact.

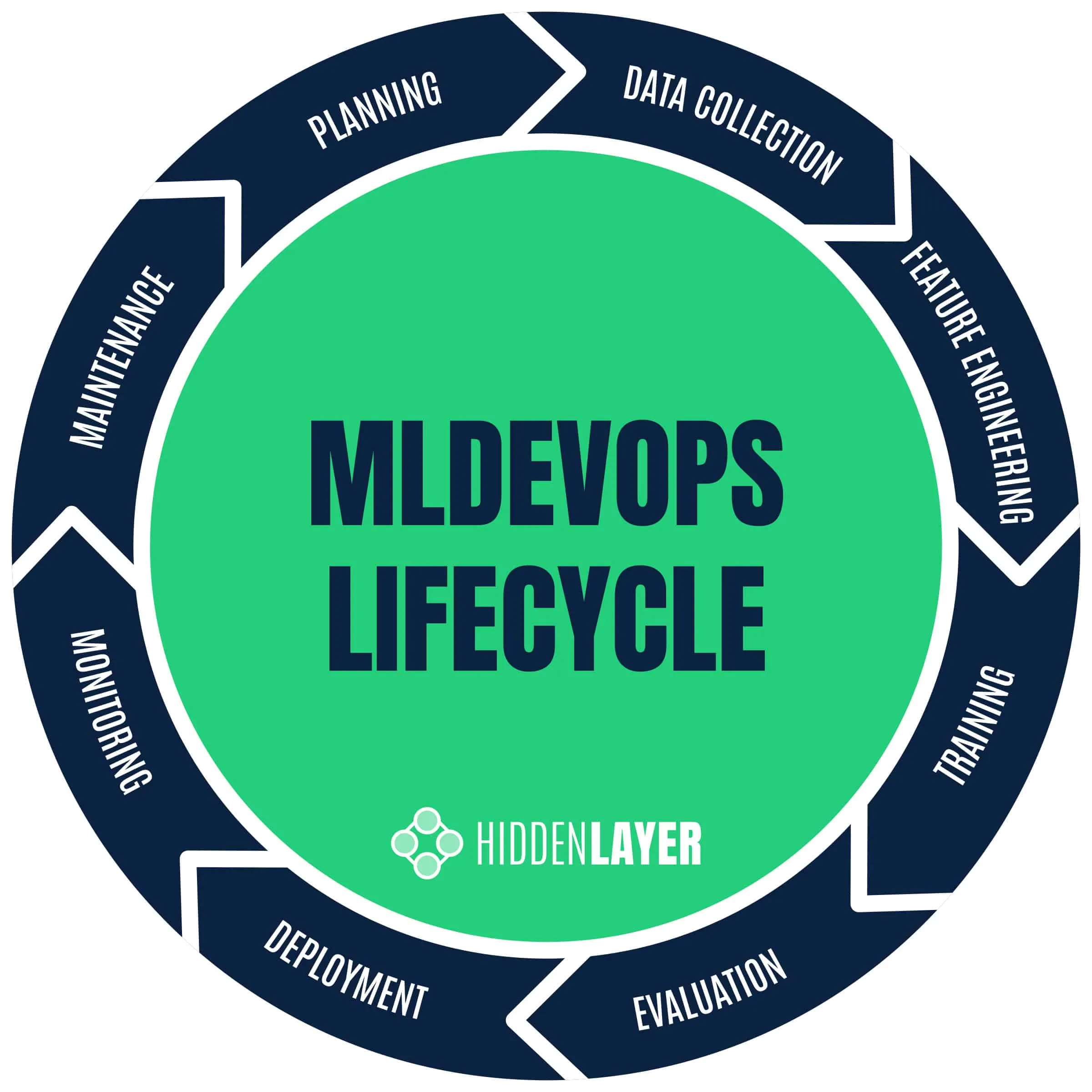

MLOps Lifecycle - A Defender's Perspective

For security practitioners, the machine learning adversary lifecycle is hugely beneficial as it allows us to understand the anatomy of attacks and implement countermeasures. When advising MLOps teams comprising data scientists, developers, and project managers, it can often help us to relate attacks to various stages of the MLOps development lifecycle. This context provides MLOps teams with a greater awareness of the potential pitfalls that may lead to security risks during day-to-day operations and helps to facilitate risk assessments, security auditing, and compliance testing for ML/AI projects.

Let’s explore some of the standard phases of a typical MLOps development lifecycle and highlight the critical security concerns at each stage.

Planning

From a security perspective, for any person or team embarking on a machine learning project of reasonable complexity, it is worth considering:

- Privacy legislation and data protection laws

- Asset management

- The trust model for data and documentation

- Threat modeling

- Adversary lifecycle

- Security testing and auditing

- Supply chain attacks

Although these topics might seem “boring” to many practitioners, careful consideration during project planning can help to highlight potential risks and serve as a good basis for defining an overarching security posture.

Data Collection, Processing & Storage

The biggest concern during the data handling phase is the poisoning of datasets, typically through poorly sourced training data, badly labeled data, or the deliberate insertion, manipulation, or corruption of samples by an adversary.

Ensuring training data is responsibly sourced and labeling efforts are verified, alongside role-based access controls (RBAC) and adopting the least privilege principle for data and documentation, will make it harder for attackers to obtain sensitive artifacts and poison training data.

Feature engineering

Feature integrity and resilience to tampering are the main concerns during feature engineering, with RBAC again playing an important role in mitigating risk by ensuring access to documentation, training data, and feature sets is restricted to those with a need to know. In addition, understanding which features may be likely to lead to drift, bias, or even information leakage can help to improve not only the security of the model (for example, by employing differential privacy techniques) but often also its accuracy, for less training and evaluation effort. A solid win all around!

Training

The training phase introduces many potential security risks, from using pre-trained models for transfer learning that may have been adversarially tampered with to vulnerable learning algorithms or the potential to train models that may leak or reveal sensitive information.

During the training phase, regardless of the origin of training data, it is also worth considering the use of robust learning algorithms that offer resilience to poisoning attacks, even when employing granular access controls and data sanitization methods to spot adversarial samples in training data.

Evaluation

After training, data scientists will typically spend time evaluating the model’s performance on external data, which is an ideal time in the lifecycle to perform security testing.

Attackers will ultimately be looking to reverse engineer model training data or tamper with input features to infer meaning. These attacks need to be preempted and robustness checked during model evaluation. Also, consider if there is any risk of bias or discrimination in your models, which might lead to poor quality predictions and risk of reputational harm if discovered.

Deployment

Deployment is another perilous phase of the lifecycle, where the model transitions from development into production. This introduces the possibility of the model falling into the hands of adversaries, raising the potential for added security risks from decision-time attacks.

Adversaries will attempt to tamper with model input, infer features and training data, and probe for data leakage. The type of deployment (i.e., online, offline, embedded, browser, etc.) will significantly alter the attack surface for an adversary. For example, it is often far more straightforward for attackers to probe and reverse engineer a model if they possess a local copy rather than conducting attacks via a web API.

As attacks against models gain traction, “model hygiene” should be at the forefront of concerns, especially when dealing with pre-trained models from public repositories. Due to the lack of cryptographic signing and verification of ML artifacts, it is not safe to assume that publicly available models have not been tampered with. Tampering may introduce backdoors that can subvert the model at decision-time or embed trojans used to stage further malware and attacks. To this end, running pre-trained models in a secure sandbox is highly recommended.
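Where a publisher does provide a checksum through a channel you trust, one basic hygiene step is to compare it against the downloaded file before loading anything. The sketch below is a minimal example using Python's standard `hashlib`. The function names are hypothetical, and the approach assumes the expected digest itself has not been tampered with. A matching digest shows only that the file is the one the publisher described, not that its contents are benign, so it complements sandboxing rather than replacing it.

```python
import hashlib
import hmac
from pathlib import Path


def sha256_of_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash the file in chunks so large model files need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_artifact(path: Path, expected_sha256: str) -> bool:
    """Return True only if the file's SHA-256 matches the expected digest."""
    actual = sha256_of_file(path)
    # compare_digest avoids leaking how many leading characters matched.
    return hmac.compare_digest(actual, expected_sha256.lower())
```

A loader would call `verify_artifact` first and refuse to deserialize the file when it returns `False`.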

Monitoring

Decision-time attacks against models must be monitored, and appropriate actions taken in response to various classes of attack. Some responses might be automated, such as ignoring or rate limiting requests, while sometimes having a “human-in-the-loop” is necessary. This might be a security analyst or data scientist who is responsible for triaging alerts, investigating attacks, and potentially retraining models or implementing further countermeasures.
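As one example of an automated response, the sketch below implements a simple per-client sliding-window rate limiter of the kind that could sit in front of a prediction endpoint. The class name, the limits, and the idea of keying on a client identifier are all illustrative assumptions. Timestamps are passed in explicitly so the behavior is easy to test.

```python
from collections import defaultdict, deque
from typing import Deque, Dict


class SlidingWindowLimiter:
    """Allow at most max_requests per client within window_seconds."""

    def __init__(self, max_requests: int, window_seconds: float) -> None:
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._history: Dict[str, Deque[float]] = defaultdict(deque)

    def allow(self, client_id: str, now: float) -> bool:
        history = self._history[client_id]
        # Drop timestamps that have aged out of the window.
        while history and now - history[0] >= self.window_seconds:
            history.popleft()
        if len(history) >= self.max_requests:
            return False  # over the limit: ignore, delay, or raise an alert
        history.append(now)
        return True


limiter = SlidingWindowLimiter(max_requests=3, window_seconds=10.0)
decisions = [limiter.allow("client-a", t) for t in (0.0, 1.0, 2.0, 3.0, 10.0)]
```

Rejected requests are also a natural place to raise an alert for the human-in-the-loop, since a burst of queries from one client can indicate probing.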

Maintenance

Conducting continuous risk assessment and threat modeling during model development and maintenance is crucial. Circumstances may change as projects grow, requiring a shift in security posture to cater for previously unforeseen risks.

Failing to Prepare is Preparing to Fail

It is always worth assuming that your machine learning models, systems, and maybe even people will be the target of attack. Considering the entire attack surface and effective countermeasures for large and complex projects can often be daunting. Still, thankfully some existing approaches can help to identify and mitigate risk effectively.

Threat Modeling

Sun Tzu most succinctly describes threat modeling:

“If you know the enemy and know yourself, you need not fear the result of a hundred battles.” - Sun Tzu, The Art of War.

In more practical terms, OWASP provides a great explanation of threat modeling (Threat Modeling | OWASP Foundation), providing a means to identify and mitigate risk throughout the software development lifecycle (SDLC).

Some key questions to ask yourself when performing threat modeling for machine learning software projects might include:

- Who are your adversaries, and what might their goals be?

- Could an adversary discover the training data or model?

- How might an attacker benefit from attacking the model?

- What might an attack look like in real life?

- What might an attack’s impact (and potential legal ramifications) be?

Again, careful consideration of these questions can help to identify security requirements and shape the overall security posture of an ML project, allowing for the implementation of diverse security mechanisms for identifying and mitigating potential threats.

Trust Model

In the context of a machine learning SDLC, trust models help to determine which stakeholders require access to information such as training data, feature sets, documentation, and source code. Employing granular role-based access controls for teams and individuals helps adhere to the principle of least privilege, making it harder for adversaries to obtain and exfiltrate sensitive information and machine learning artifacts.
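A trust model can be written down as data, which makes it easy to review and to enforce. The sketch below is a minimal deny-by-default role check. The roles and the assets each one may access are hypothetical examples, not a recommended policy.

```python
from enum import Enum
from typing import Dict, FrozenSet


class Asset(Enum):
    TRAINING_DATA = "training_data"
    FEATURE_SETS = "feature_sets"
    DOCUMENTATION = "documentation"
    SOURCE_CODE = "source_code"


# Hypothetical grants: each role lists only the assets it needs.
ROLE_GRANTS: Dict[str, FrozenSet[Asset]] = {
    "data_scientist": frozenset(
        {Asset.TRAINING_DATA, Asset.FEATURE_SETS, Asset.DOCUMENTATION}
    ),
    "developer": frozenset({Asset.SOURCE_CODE, Asset.DOCUMENTATION}),
    "project_manager": frozenset({Asset.DOCUMENTATION}),
}


def can_access(role: str, asset: Asset) -> bool:
    """Deny by default: unknown roles and unlisted assets get no access."""
    return asset in ROLE_GRANTS.get(role, frozenset())
```

For example, `can_access("developer", Asset.TRAINING_DATA)` is `False` under these grants, so a compromised developer account would not by itself expose the training set.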

Conclusions

As AML becomes more deeply entwined with the broader cybersecurity ecosystem, the diverse skill sets of veteran security practitioners will help us formalize methodologies and processes to better evaluate and communicate risk, research practical attacks, and, most crucially, provide new and effective countermeasures to detect and respond to attacks.

Everyone from CISOs and MLOps teams to cybersecurity stakeholders can benefit from having a high-level understanding of the adversarial machine learning attack matrix, lifecycles, and threat/trust models, which are crucial to improving awareness and bringing security considerations to the forefront of ML/AI production.

We look forward to publishing more in-depth technical research into adversarial machine learning in the near future and working closely with the ML/AI community to better understand and mitigate risk.


In the News

HiddenLayer’s research is shaping global conversations about AI security and trust.

HiddenLayer Selected as Awardee on $151B Missile Defense Agency SHIELD IDIQ Supporting the Golden Dome Initiative


Austin, TX – December 23, 2025 – HiddenLayer, the leading provider of Security for AI, today announced it has been selected as an awardee on the Missile Defense Agency’s (MDA) Scalable Homeland Innovative Enterprise Layered Defense (SHIELD) multiple-award, indefinite-delivery/indefinite-quantity (IDIQ) contract. The SHIELD IDIQ has a ceiling value of $151 billion and serves as a core acquisition vehicle supporting the Department of Defense’s Golden Dome initiative to rapidly deliver innovative capabilities to the warfighter.

The program enables MDA and its mission partners to accelerate the deployment of advanced technologies with increased speed, flexibility, and agility. HiddenLayer was selected based on its successful past performance with ongoing US Federal contracts and projects with the Department of Defense (DoD) and United States Intelligence Community (USIC). “This award reflects the Department of Defense’s recognition that securing AI systems, particularly in highly classified environments, is now mission-critical,” said Chris “Tito” Sestito, CEO and Co-founder of HiddenLayer. “As AI becomes increasingly central to missile defense, command and control, and decision-support systems, securing these capabilities is essential. HiddenLayer’s technology enables defense organizations to deploy and operate AI with confidence in the most sensitive operational environments.”

Underpinning HiddenLayer’s unique solution for the DoD and USIC is HiddenLayer’s Airgapped AI Security Platform, the first solution designed to protect AI models and development processes in fully classified, disconnected environments. Deployed locally within customer-controlled environments, the platform supports strict US Federal security requirements while delivering enterprise-ready detection, scanning, and response capabilities essential for national security missions.

HiddenLayer’s Airgapped AI Security Platform delivers comprehensive protection across the AI lifecycle, including:

- Comprehensive Security for Agentic, Generative, and Predictive AI Applications: Advanced AI discovery, supply chain security, testing, and runtime defense.

- Complete Data Isolation: Sensitive data remains within the customer environment and cannot be accessed by HiddenLayer or third parties unless explicitly shared.

- Compliance Readiness: Designed to support stringent federal security and classification requirements.

- Reduced Attack Surface: Minimizes exposure to external threats by limiting unnecessary external dependencies.

“By operating in fully disconnected environments, the Airgapped AI Security Platform provides the peace of mind that comes with complete control,” continued Sestito. “This release is a milestone for advancing AI security where it matters most: government, defense, and other mission-critical use cases.”

The SHIELD IDIQ supports a broad range of mission areas and allows MDA to rapidly issue task orders to qualified industry partners, accelerating innovation in support of the Golden Dome initiative’s layered missile defense architecture.

Performance under the contract will occur at locations designated by the Missile Defense Agency and its mission partners.

About HiddenLayer

HiddenLayer, a Gartner-recognized Cool Vendor for AI Security, is the leading provider of Security for AI. Its security platform helps enterprises safeguard their agentic, generative, and predictive AI applications. HiddenLayer is the only company to offer turnkey security for AI that does not add unnecessary complexity to models and does not require access to raw data and algorithms. Backed by patented technology and industry-leading adversarial AI research, HiddenLayer’s platform delivers supply chain security, runtime defense, security posture management, and automated red teaming.

Contact

SutherlandGold for HiddenLayer

hiddenlayer@sutherlandgold.com

HiddenLayer Announces AWS GenAI Integrations, AI Attack Simulation Launch, and Platform Enhancements to Secure Bedrock and AgentCore Deployments

AUSTIN, TX — December 1, 2025 — HiddenLayer, the leading AI security platform for agentic, generative, and predictive AI applications, today announced expanded integrations with Amazon Web Services (AWS) Generative AI offerings and a major platform update debuting at AWS re:Invent 2025. HiddenLayer offers additional security features for enterprises using generative AI on AWS, complementing existing protections for models, applications, and agents running on Amazon Bedrock, Amazon Bedrock AgentCore, Amazon SageMaker, and SageMaker Model Serving Endpoints.

As organizations rapidly adopt generative AI, they face increasing risks of prompt injection, data leakage, and model misuse. HiddenLayer’s security technology, built on AWS, helps enterprises address these risks while maintaining speed and innovation.

“As organizations embrace generative AI to power innovation, they also inherit a new class of risks unique to these systems,” said Chris Sestito, CEO and Co-Founder of HiddenLayer. “Working with AWS, we’re ensuring customers can innovate safely, bringing trust, transparency, and resilience to every layer of their AI stack.”

Built on AWS to Accelerate Secure AI Innovation

HiddenLayer’s AI Security Platform and integrations are available in AWS Marketplace, offering native support for Amazon Bedrock and Amazon SageMaker. The company complements AWS infrastructure security by providing AI-specific threat detection, identifying risks within model inference and agent cognition that traditional tools overlook.

Through automated security gates, continuous compliance validation, and real-time threat blocking, HiddenLayer enables developers to maintain velocity while giving security teams confidence and auditable governance for AI deployments.

Alongside these integrations, HiddenLayer is introducing a complete platform redesign and launching a new AI Discovery module and an enhanced AI Attack Simulation module, further strengthening its end-to-end AI Security Platform that protects agentic, generative, and predictive AI systems.

Key enhancements include:

- AI Discovery: Identifies AI assets within technical environments to build AI asset inventories.

- AI Attack Simulation: Automates adversarial testing and red teaming to identify vulnerabilities before deployment.

- Complete UI/UX Revamp: Simplified sidebar navigation and reorganized settings for faster workflows across AI Discovery, AI Supply Chain Security, AI Attack Simulation, and AI Runtime Security.

- Enhanced Analytics: Filterable and exportable data tables, with new module-level graphs and charts.

- Security Dashboard Overview: Unified view of AI posture, detections, and compliance trends.

- Learning Center: In-platform documentation and tutorials, with future guided walkthroughs.

HiddenLayer will demonstrate these capabilities live at AWS re:Invent 2025, December 1–5 in Las Vegas.

To learn more or request a demo, visit https://hiddenlayer.com/reinvent2025/.

About HiddenLayer

HiddenLayer, a Gartner-recognized Cool Vendor for AI Security, is the leading provider of Security for AI. Its platform helps enterprises safeguard agentic, generative, and predictive AI applications without adding unnecessary complexity or requiring access to raw data and algorithms. Backed by patented technology and industry-leading adversarial AI research, HiddenLayer delivers supply chain security, runtime defense, posture management, and automated red teaming.

For more information, visit www.hiddenlayer.com.

Press Contact:

SutherlandGold for HiddenLayer

hiddenlayer@sutherlandgold.com

HiddenLayer Joins Databricks’ Data Intelligence Platform for Cybersecurity

On September 30, Databricks officially launched its Data Intelligence Platform for Cybersecurity (https://www.databricks.com/blog/transforming-cybersecurity-data-intelligence?utm_source=linkedin&utm_medium=organic-social), marking a significant step in unifying data, AI, and security under one roof. At HiddenLayer, we’re proud to be part of this new data intelligence platform, as it represents a significant milestone in the industry's direction.

Why Databricks’ Data Intelligence Platform for Cybersecurity Matters for AI Security

Cybersecurity and AI are now inseparable. Modern defenses rely heavily on machine learning models, but that also introduces new attack surfaces. Models can be compromised through adversarial inputs, data poisoning, or theft. These attacks can result in missed fraud detection, compliance failures, and disrupted operations.

Until now, data platforms and security tools have operated mainly in silos, creating complexity and risk.

The Databricks Data Intelligence Platform for Cybersecurity is a unified, AI-powered, and ecosystem-driven platform that empowers partners and customers to modernize security operations, accelerate innovation, and unlock new value at scale.

How HiddenLayer Secures AI Applications Inside Databricks

HiddenLayer adds the critical layer of security for AI models themselves. Our technology scans and monitors machine learning models for vulnerabilities, detects adversarial manipulation, and ensures models remain trustworthy throughout their lifecycle.

By integrating with Databricks Unity Catalog, we make AI application security seamless, auditable, and compliant with emerging governance requirements. This empowers organizations to demonstrate due diligence while accelerating the safe adoption of AI.
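As a rough illustration of what scanning a serialized model for vulnerabilities can involve, the sketch below inspects a Python pickle stream for opcodes that can import or call arbitrary objects, without ever loading it. It is a simplified example and does not represent HiddenLayer's product or detection logic. The opcode list, the `find_suspicious_opcodes` helper, and the `Payload` class are assumptions made purely for this example.

```python
import pickle
import pickletools

# Assumption for this sketch: opcodes that can import or call arbitrary
# objects during unpickling are treated as worth flagging.
SUSPICIOUS_OPCODES = {
    "GLOBAL", "STACK_GLOBAL", "REDUCE", "INST", "OBJ", "NEWOBJ", "NEWOBJ_EX",
}


def find_suspicious_opcodes(data: bytes) -> list[str]:
    """Return the names of flagged opcodes in a pickle stream.

    The stream is only disassembled with pickletools.genops; it is never
    passed to pickle.loads, so nothing embedded in it is executed.
    """
    found = []
    for opcode, _arg, _pos in pickletools.genops(data):
        if opcode.name in SUSPICIOUS_OPCODES:
            found.append(opcode.name)
    return found


class Payload:
    """Hypothetical stand-in for a tampered model file."""

    def __reduce__(self):
        # On load, pickle would call print(...); a real attack would call
        # something far more harmful. Pickling alone does not run it.
        return (print, ("side effect on load",))


benign = pickle.dumps({"weights": [0.1, 0.2, 0.3]})
tampered = pickle.dumps(Payload())

print("benign:", find_suspicious_opcodes(benign))
print("tampered:", find_suspicious_opcodes(tampered))
```

Many legitimate model files also use these opcodes to rebuild ordinary classes, so a flag here is a prompt for closer inspection rather than proof of compromise. A practical scanner would typically pair this kind of check with an allowlist of expected imports.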

The Future of Secure AI Adoption with Databricks and HiddenLayer

The Databricks Data Intelligence Platform for Cybersecurity marks a turning point in how organizations must approach the intersection of AI, data, and defense. HiddenLayer ensures the AI applications at the heart of these systems remain safe, auditable, and resilient against attack.

As adversaries grow more sophisticated and regulators demand greater transparency, securing AI is an immediate necessity. By embedding HiddenLayer directly into the Databricks ecosystem, enterprises gain the assurance that they can innovate with AI while maintaining trust, compliance, and control.

In short, the future of cybersecurity will not be built solely on data or AI, but on the secure integration of both. Together, Databricks and HiddenLayer are making that future possible.

FAQ: Databricks and HiddenLayer AI Security

What is the Databricks Data Intelligence Platform for Cybersecurity?

The Databricks Data Intelligence Platform for Cybersecurity delivers the only unified, AI-powered, and ecosystem-driven platform that empowers partners and customers to modernize security operations, accelerate innovation, and unlock new value at scale.

Why is AI application security important?

AI applications and their underlying models can be attacked through adversarial inputs, data poisoning, or theft. Securing models reduces risks of fraud, compliance violations, and operational disruption.

How does HiddenLayer integrate with Databricks?

HiddenLayer integrates with Databricks Unity Catalog to scan models for vulnerabilities, monitor for adversarial manipulation, and ensure compliance with AI governance requirements.
